Why We Built an AI Price Testing Engine

Pricing is one of the few levers in Shopify ecommerce that can change profit today, but most stores still run it like a spreadsheet. A number gets set, a promo gets layered on top, and everyone watches revenue like it explains what is happening. It rarely does.

Once a catalog grows, static pricing starts to crack as the environment changes faster than the pricing logic. The same SKU behaves differently depending on who is buying, what else is in the cart, which channel they came from, and whether they have seen three discounts in the last two weeks. Multiply that by thousands of products, and pricing turns into an operating mess: old prices that never get revisited, discount habits that become permanent, and decisions made on instinct because there is no clean way to test.

This is where even strong teams get trapped. They copy competitors and slide into a race to the bottom, or they build rule sets that look disciplined until real customer behavior breaks them. Spreadsheets can show you what changed, but they never tell you what to do next. Dashboards can confirm the outcome, but they rarely explain what caused it. So you reach for the one lever that feels controllable in the moment, and you cut the price just to keep pace.

That is why we built an AI price testing engine: to make pricing measurable the way conversion optimization is measurable. It runs on real order and customer data, then designs controlled tests that show how price shapes demand, conversion, margin, and lifetime value, product by product, segment by segment, and situation by situation. The goal is learning, not automation for its own sake.

For Shopify stores, this problem gets worse as you scale: discount codes pile up, apps introduce incentives you forget to audit, Shopify subscription systems and bundle logic shift the effective price, and Shopify Markets adds regional context that changes willingness to pay. In that environment, the price you think you are charging is often not the price customers experience. That is why this is not a repricing bot. It does not chase the market. It is not a discount machine built to force volume. It is pricing intelligence designed to surface clean tests and defensible decisions so pricing stops being a debate and becomes a system that gets smarter every time you use it.

What Makes AI Price Testing Fundamentally Different

Most pricing tools are built to react. A competitor drops their price, conversion softens, inventory shifts, and the system responds by moving numbers up or down. It looks intelligent because it moves quickly, but it is still chasing the surface of the problem. You get activity, not understanding.

AI price testing is built for a different job. Instead of asking what price to show right now, it asks what the price does to behavior when you change it under controlled conditions. The system treats pricing like a learning loop. You test a move in a defined slice of demand, watch what happens, and then decide with evidence instead of instinct.

Dynamic pricing is optimization without explanation. On Shopify, it often turns into reactive discounting, because it is the fastest lever in the admin and the easiest thing to justify when performance gets tense.

AI price testing is experimentation with memory. It isolates variables so you can see whether conversion changed because of price or because the market got noisy. It measures what happens beyond the checkout, whether the order composition shifts, whether discounts stop working, whether repeat customers behave differently, and whether margin improves without the volume drop you were warned about.

That is why this approach prioritizes controlled experimentation over reactive repricing. Tests are scoped, timed, and measured with guardrails, and in early pilots we usually cap exposure to a defined slice of traffic or orders first, then expand only after the signal holds.

The real advantage is that it builds confidence over time. Pricing stops being a collection of opinions and starts becoming a record of observed behavior: which products are truly price-sensitive, which customers barely react, which discounts create lift versus noise, which price increases hold, and exactly where profit is being left on the table.

Profit is the point. Revenue can be manufactured with discounts, and that is why so many pricing tools optimize for it. AI price testing stays focused on margin, conversion quality, and long-term value, because those are the outcomes that survive after the promotion ends.

Data Inputs: What the System Analyzes

Before the system can tell you what to test or change, it has to understand how pricing behaves inside your business. Price only has meaning in context. A number can seem expensive, fair, or invisible depending on who sees it, when, and what’s going on.

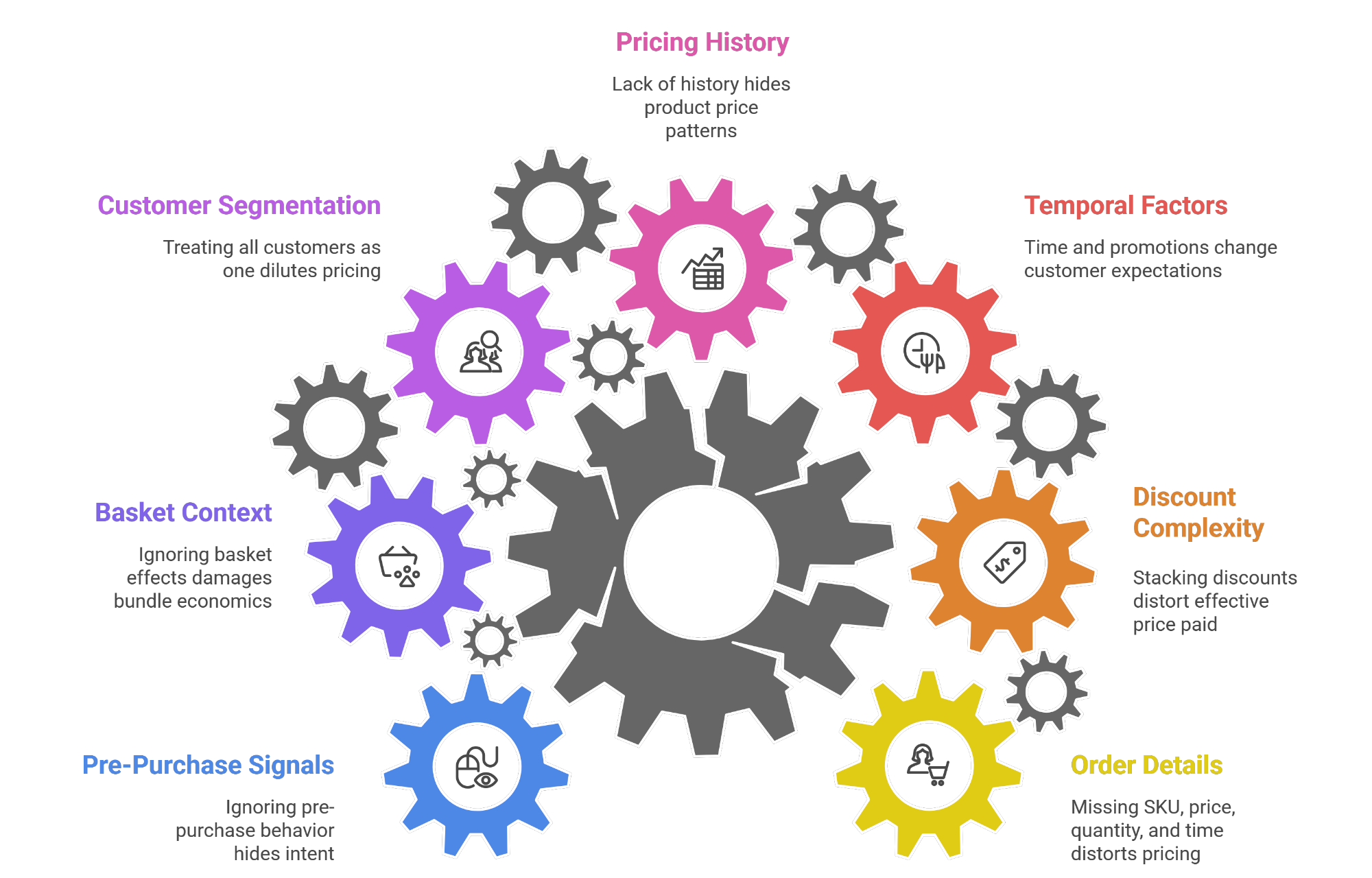

That is why the engine starts with order-level reality. Every transaction carries details that pricing decisions depend on: which SKU was sold, the exact price paid, the quantity purchased, and when the order happened. On Shopify, it also matters how the discount showed up: a code, an automatic discount, a free shipping threshold, or an app-driven bundle that quietly changed the effective price paid. The system also tracks discount applications and shipping discounts at the line-item level because that is where Shopify pricing gets distorted when offers stack.
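As a rough illustration of what "effective price" means when offers stack, here is a minimal sketch. The `LineItem` record and its field names are hypothetical simplifications for this example, not Shopify's actual order schema; the only idea it shows is that every discount allocated to a line is subtracted before dividing by quantity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LineItem:
    # Hypothetical, simplified record; not the real Shopify order payload.
    sku: str
    list_price: float          # listed unit price
    quantity: int
    # Amounts taken off this line by each stacked offer (code, automatic, app bundle).
    discount_allocations: List[float] = field(default_factory=list)


def effective_unit_price(item: LineItem) -> float:
    """Unit price the customer actually paid after all stacked discounts."""
    if item.quantity <= 0:
        raise ValueError("quantity must be positive")
    gross = item.list_price * item.quantity
    net = max(gross - sum(item.discount_allocations), 0.0)  # never below zero
    return net / item.quantity


# Two units listed at 40.00, with a code (8.00 off) and a bundle offer (4.00 off) stacked.
item = LineItem("TEE-BLK-M", 40.0, 2, [8.0, 4.0])
print(effective_unit_price(item))  # 34.0
```

The listed price here is 40.00, but the price this customer experienced is 34.00, which is the number any demand analysis should use.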

A product purchased during a quiet weekday behaves differently than the same product purchased in a high-intent weekend rush, and promotions change customer expectations in ways that outlive the campaign itself. The system preserves those conditions instead of flattening them.

It also tracks pricing history at the SKU level because products develop patterns over time. Some items behave like switches; small price moves trigger immediate volume changes. Others are strangely stable; buyers barely react. Quantity shifts, threshold effects, and long-tail demand responses are hard to see when you only look at blended conversion rates. The engine watches how demand responds across real transactions, and that is where elasticity starts to become visible.

Customer behavior adds another layer that most pricing discussions ignore. First-time buyers, repeat customers, and high-lifetime-value segments respond differently to the same price, even when they buy the same product. Purchase frequency, lifetime value, and past exposure to discounts shape sensitivity. A loyal customer might not care about a small increase, while a new buyer hesitates at the same number. Treating them as one audience is how pricing decisions get watered down.

Then there is basket context, the part that breaks most simplistic pricing logic. Price changes influence what else gets purchased, not just whether something converts. Attach rates, upsells, and cross-sells can rise or collapse depending on how entry-point products are priced. A small adjustment can lift total order value or quietly damage bundle economics. These effects do not show up when products are analyzed in isolation.

Finally, the system watches what happens before the order, the signals that show intent before revenue shows up: browsing patterns, abandoned carts, time-to-purchase after a price view, and how customers react when pricing shifts. Those moments reveal hesitation, urgency, and sensitivity long before the checkout confirms anything. When the system combines that pre-purchase behavior with segmentation by customer type, order size, and channel, pricing stops being a blurred average and becomes something you can actually isolate, reason about, and test.

Core Algorithms Behind the Pricing Intelligence Tool

This is where the system stops describing what happened and starts learning why it happened. Pricing teams get stuck in correlation: the price went up and conversion dipped, so the conclusion feels obvious. The engine is built to separate signal from noise, even when the business is changing week to week.

It starts with elasticity, but not as a single number. The system builds SKU-level elasticity curves and keeps them current as new data comes in. Some products react immediately to small moves. Others barely respond until a threshold is crossed. That behavior also changes by buyer type. A loyal customer and a first-time buyer can respond like they are in two different markets, so elasticity is modeled by segment, not averaged into something useless.
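To make segment-level elasticity concrete, here is a minimal sketch that assumes a constant-elasticity (log-log) demand model fitted with ordinary least squares. That model choice, the function names, and the sample numbers are illustrative assumptions; the engine's actual curves are not specified here and would need to handle thresholds and far more data.

```python
import math
from collections import defaultdict


def elasticity(points):
    """OLS slope of ln(units) on ln(price): % change in units per 1% change in price."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two (price, units) observations")
    xs = [math.log(price) for price, _ in points]
    ys = [math.log(units) for _, units in points]
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("price never varied, so elasticity is not identifiable")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def elasticity_by_segment(observations):
    """observations: iterable of (segment, price, units_sold) tuples."""
    grouped = defaultdict(list)
    for segment, price, units in observations:
        grouped[segment].append((price, units))
    return {segment: elasticity(pts) for segment, pts in grouped.items()}


# Made-up numbers: first-time buyers react twice as strongly as repeat buyers.
observations = [
    ("first_time", 10.0, 100), ("first_time", 20.0, 25),
    ("repeat", 10.0, 100), ("repeat", 20.0, 50),
]
print(elasticity_by_segment(observations))  # roughly -2.0 for first_time, -1.0 for repeat
```

Averaging these two segments would report an elasticity near -1.5, a number that describes neither group.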

Demand is rarely linear, and the engine assumes it will behave oddly. It detects threshold effects, saturation points, and decay patterns where an initial lift fades as customers adapt. That stops teams from repeating tactics that only worked once or working the discount lever harder because it feels safe.

Pricing also lives inside trade-offs. A price increase can raise margin and still reduce profit if it changes order composition. A discount can lift conversion and still damage lifetime value if it trains customers to wait. The system models price, conversion, margin, and basket shifts together so decisions are tied to profit, not a single headline metric.
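The first trade-off above can be shown with simple arithmetic. This sketch assumes a deliberately reduced profit model (one entry product plus one optional attached item), and all figures are invented for illustration.

```python
def profit_per_visitor(price, unit_cost, conversion, attach_rate, attach_margin):
    """Expected profit per visitor: conversion x (entry margin + expected attach margin)."""
    entry_margin = price - unit_cost
    return conversion * (entry_margin + attach_rate * attach_margin)


# Baseline: 50.00 price, 4.0% conversion, half of orders add a 20.00-margin accessory.
baseline = profit_per_visitor(50.0, 30.0, 0.040, 0.50, 20.0)

# After a price increase: unit margin rises from 20 to 24 and conversion barely moves,
# but fewer buyers add the accessory.
raised = profit_per_visitor(54.0, 30.0, 0.038, 0.20, 20.0)

print(f"baseline={baseline:.3f} raised={raised:.3f}")
```

In this example the unit margin improves by 20 percent while profit per visitor falls from about 1.20 to about 1.06, because the order composition changed. A single headline metric would have called the increase a win.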

Confidence matters as much as performance, especially in noisy environments. Bayesian inference is used to reduce false positives, so the system does not declare a “winner” when the difference is just random variance. It calculates how sure it is and how risky it would be to act on the result.
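One common way to express that kind of confidence is a Beta-Binomial comparison of conversion rates. The sketch below is an assumption about how such a check could look, not a description of the engine's actual model: it uses uniform Beta(1, 1) priors, looks only at conversion rather than profit, and estimates the probability by Monte Carlo sampling with a fixed seed.

```python
import random


def prob_variant_beats_control(conv_c, n_c, conv_v, n_v, draws=20000, seed=0):
    """Posterior probability that the variant's conversion rate exceeds control's.

    Assumes independent Beta(1, 1) priors, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        control_rate = rng.betavariate(1 + conv_c, 1 + n_c - conv_c)
        variant_rate = rng.betavariate(1 + conv_v, 1 + n_v - conv_v)
        wins += variant_rate > control_rate
    return wins / draws


# Identical results in both arms: the probability should sit near 0.5, so no winner.
print(prob_variant_beats_control(120, 4000, 120, 4000))

# Conversion doubles in the variant: the probability should be close to 1.
print(prob_variant_beats_control(100, 4000, 200, 4000))
```

A decision rule would then act only when this probability clears a chosen threshold, and would weigh the size of the potential loss if the result turns out to be wrong.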

The engine also learns cross-SKU effects. Customers substitute, bundle, and anchor on prices in ways that break simple logic. Changing one item can shift demand to adjacent products or alter attach rates, and those interactions are learned directly from orders.

Time is treated as a variable, not a backdrop. Seasonality, promotional fatigue, and temporary demand spikes are accounted for so short-term behavior is not mistaken for permanent truth. The outcome is a pricing model that gets sharper with use and recommendations grounded in how your customers actually buy.

How AI Price Tests Are Designed and Executed

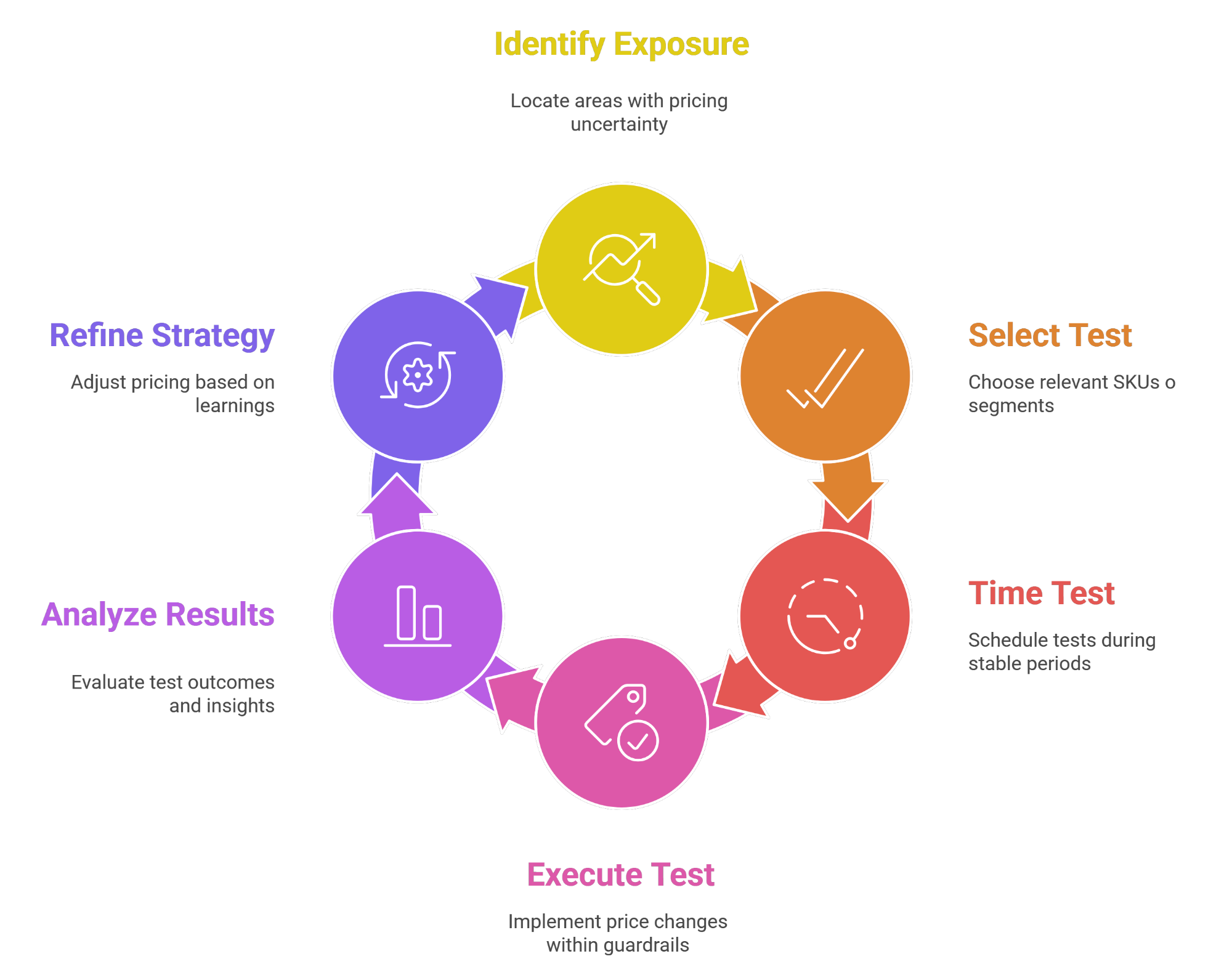

Price testing in a live store is not a lab exercise. If you treat it like one, you either scare the business with unnecessary swings or you run tests so small they teach you nothing. The system is built to sit in the middle, learn quickly, and stay safe.

Test selection starts with identifying where the business is exposed. Sometimes it is a high-margin SKU that has not been touched in years. Sometimes it is a product that sells well but depends too heavily on discounts. Sometimes it is a segment that looks healthy in the aggregate but shows hesitation once you separate first-time buyers from repeat customers. The goal is not to test everything. It is to test where uncertainty is expensive.

Timing is part of the design. Tests are avoided during noisy periods when results would be distorted: heavy promotions, inventory constraints, abnormal traffic spikes, or major merchandising changes. If the environment is unstable, the system waits. When a test runs, the price movement is sized to be noticeable without being disruptive, usually small single-digit percentage moves first, unless historical variation shows customers have already tolerated wider swings.

Each test runs inside guardrails. Exposure is intentionally contained, scoped to a specific SKU, segment, or channel, so the rest of the business stays steady. Price movement has limits, and the test has an expiration date, both set by volume and confidence. If the early read turns ugly (conversion falls off a cliff, margin collapses, or baskets shrink in ways that do not recover), the change is rolled back before it leaves a mark.
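A rollback guardrail of this kind can be sketched as a comparison of test metrics against control. The metric names, the thresholds, and the rule that any single breach triggers a rollback are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Guardrails:
    # Maximum tolerated relative drop versus control (0.15 means 15%). Hypothetical values.
    max_conversion_drop: float
    max_margin_drop: float
    max_aov_drop: float


def relative_drop(control: float, variant: float) -> float:
    """Fractional decline of variant versus control; 0.0 if control is not positive."""
    if control <= 0:
        return 0.0
    return (control - variant) / control


def breached_guardrails(control: Dict[str, float], variant: Dict[str, float],
                        limits: Guardrails) -> List[str]:
    """Names of metrics whose drop exceeds its limit; any entry means roll back."""
    checks = {
        "conversion": limits.max_conversion_drop,
        "margin_per_order": limits.max_margin_drop,
        "avg_order_value": limits.max_aov_drop,
    }
    return [metric for metric, limit in checks.items()
            if relative_drop(control[metric], variant[metric]) > limit]


limits = Guardrails(max_conversion_drop=0.15, max_margin_drop=0.10, max_aov_drop=0.10)
control = {"conversion": 0.040, "margin_per_order": 18.0, "avg_order_value": 62.0}
variant = {"conversion": 0.031, "margin_per_order": 19.5, "avg_order_value": 61.0}

breaches = breached_guardrails(control, variant, limits)
if breaches:
    print("roll back, breached:", breaches)
```

Here margin per order actually improved, but conversion fell by more than the 15 percent limit, so the test would be stopped.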

Tests can run in parallel, but they are not allowed to step on each other. The engine tracks overlap and avoids combining changes that would make results impossible to interpret. Learning has to stay clean; otherwise, you end up with movement and no conclusions.
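Overlap tracking can be approximated with a pairwise conflict check. In this sketch, two tests conflict when their date ranges overlap, they share at least one SKU, and their audiences intersect. The `PriceTest` fields and the `"all"` wildcard are hypothetical, and the check does not capture the cross-SKU substitution effects a fuller system would also need to consider.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet

ALL = "all"  # hypothetical wildcard meaning "every segment" or "every channel"


@dataclass(frozen=True)
class PriceTest:
    name: str
    skus: FrozenSet[str]
    segment: str
    channel: str
    start: date
    end: date


def _audiences_intersect(a: str, b: str) -> bool:
    return a == b or ALL in (a, b)


def conflicts(a: PriceTest, b: PriceTest) -> bool:
    """True when running both tests would expose the same buyers to both changes."""
    dates_overlap = a.start <= b.end and b.start <= a.end
    shared_skus = bool(a.skus & b.skus)
    shared_audience = (_audiences_intersect(a.segment, b.segment)
                       and _audiences_intersect(a.channel, b.channel))
    return dates_overlap and shared_skus and shared_audience


t1 = PriceTest("new-buyer +4%", frozenset({"SKU-A"}), "first_time", "paid",
               date(2024, 3, 1), date(2024, 3, 14))
t2 = PriceTest("bundle -3%", frozenset({"SKU-A", "SKU-B"}), ALL, "paid",
               date(2024, 3, 10), date(2024, 3, 24))
t3 = PriceTest("repeat +2%", frozenset({"SKU-A"}), "repeat", "paid",
               date(2024, 3, 1), date(2024, 3, 14))

print(conflicts(t1, t2))  # True: same SKU, overlapping dates, t2 covers every segment
print(conflicts(t1, t3))  # False: same SKU and dates, but disjoint segments
```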

After each run, the result is not filed away as a one-off win or loss. It becomes a new piece of understanding. A successful test sharpens where price can move. A neutral test reduces guesswork. A failed test defines a boundary the business should not cross again. Over time, the system gets faster at spotting where price truly matters and where it is just noise, which is how pricing stops being a constant scramble and starts behaving like an intentional process.

Real Pricing Insights the System Produces

The point of testing is not movement. It is clarity. For each SKU or segment, the engine outputs a recommended test band and a risk cap on exposure. Then it produces a profit decomposition, showing what actually changed: unit volume, margin per unit, basket composition, discount substitution, and repeat behavior shifts. Once enough controlled experiments have run, the system starts surfacing patterns that most teams never see because they sit between dashboards and instincts.
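The first two terms of such a decomposition can be written as an exact identity. This sketch covers only the unit volume and margin-per-unit effects; basket composition, discount substitution, and repeat behavior are left out, and the figures are invented.

```python
def decompose_profit_change(units_before, margin_before, units_after, margin_after):
    """Split a profit change into a volume effect and a margin effect.

    Uses the identity:
      q1*m1 - q0*m0 = (q1 - q0)*m0 + (m1 - m0)*q1
    so the two effects always sum to the total change.
    """
    volume_effect = (units_after - units_before) * margin_before
    margin_effect = (margin_after - margin_before) * units_after
    return {
        "volume_effect": volume_effect,
        "margin_effect": margin_effect,
        "total_change": volume_effect + margin_effect,
    }


# A price increase lifts margin per unit from 12.00 to 14.00 while units fall from 1000 to 950.
result = decompose_profit_change(1000, 12.0, 950, 14.0)
print(result)
# {'volume_effect': -600.0, 'margin_effect': 1900.0, 'total_change': 1300.0}
```

Reading the result: the lost volume cost 600 in profit, the higher margin added 1,900, and the net change was a gain of 1,300.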

One of the first things that shows up is underpricing. Certain products keep converting even as price increases slightly. Demand holds, basket behavior stays stable, and margin quietly improves. These SKUs are often priced low for historical reasons, not strategic ones, and they stay that way because no one wants to be responsible for raising price without proof. Testing removes that fear.

The opposite problem appears just as often. Some prices suppress demand in subtle ways. On the surface, conversion looks acceptable. Once you isolate segments, hesitation and abandonment start to show up at specific thresholds. A small correction in those cases does more for profit than another promotion ever could.

Another common insight is how little many discounts actually do. Broad promotions feel effective because revenue spikes, but testing often shows that demand would have arrived anyway. Margin gets sacrificed without changing behavior. In contrast, modest, targeted price adjustments sometimes outperform deep discounts by preserving value and trust.

Price testing also exposes how pricing shapes the order itself. Entry-point products pull customers into higher-margin combinations when they are priced correctly. Small shifts can change attach rates and bundle performance in ways that are invisible if you only look at SKU-level conversion. This is where average order value improves without forcing upsells.

What makes these insights useful is that they come with boundaries. The system does not just tell you what worked. It shows you where price stops working, which segments are sensitive, and where pushing harder would create more damage than upside. That turns pricing from a guessing exercise into something you can act on without second-guessing every move.

Advanced Features Beyond Basic Price Testing

Basic price testing tells you what happens when a price moves. The advanced layer explains why it happens and whether it will still hold next month. This is where pricing stops being SKU-by-SKU and starts reflecting how real customers behave across contexts.

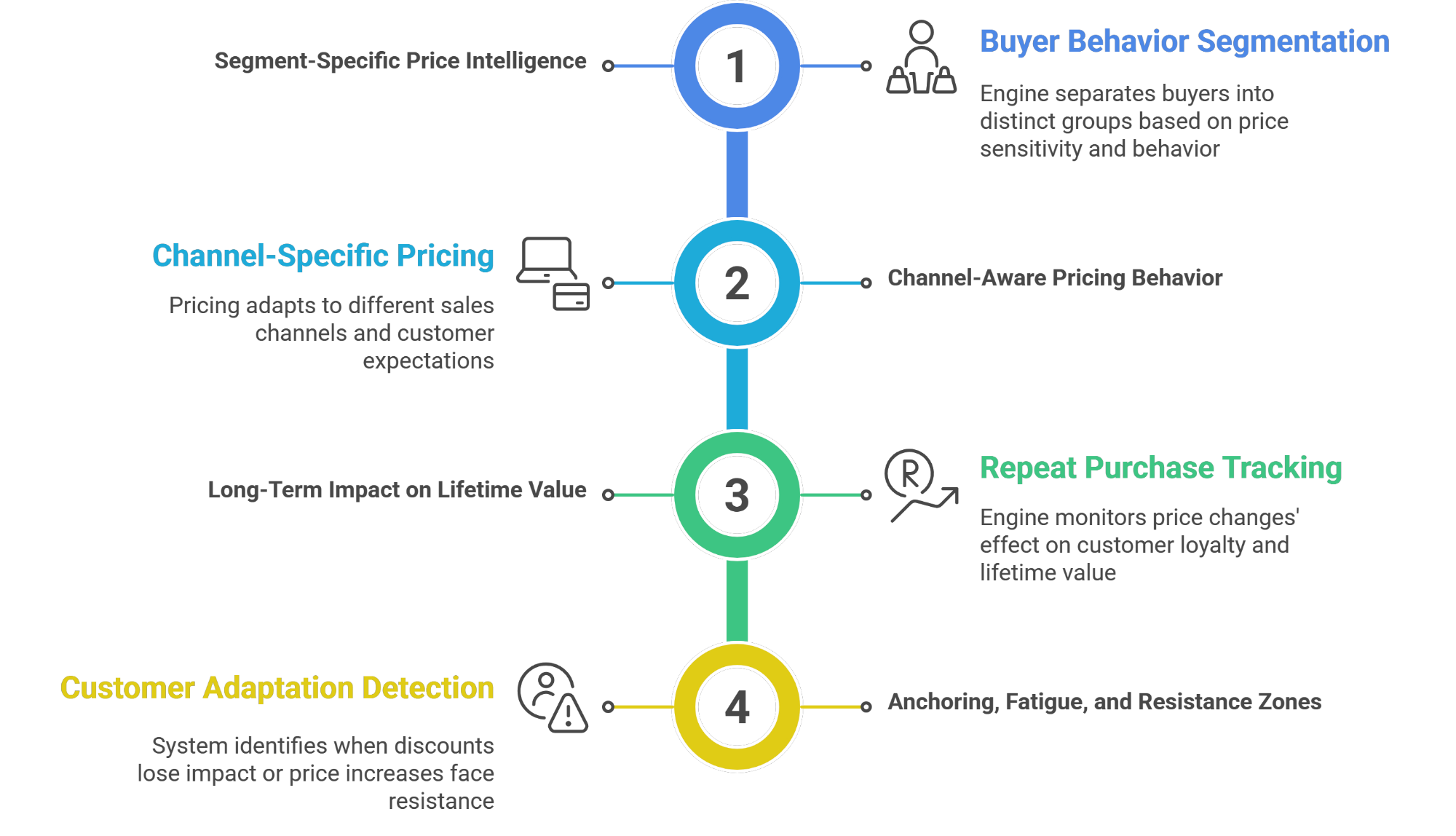

Segment-Specific Price Intelligence

Once pricing behavior becomes clear at a transactional level, the engine starts separating buyers who behave like different markets. New customers often read price as a risk signal. Returning customers care more about consistency and value. High-intent buyers tolerate thresholds that casual browsers will not. When those behaviors are split cleanly, pricing stops being blunt and starts becoming precise.

Channel-Aware Pricing Behavior

A price that works in organic traffic can fail in paid channels, not because the product changed, but because expectations did. Paid shoppers compare harder, bounce faster, and often behave with less patience. Treating every channel the same forces compromises that quietly hurt performance. The system keeps channel context intact so pricing can match how demand actually arrives.

Long-Term Impact on Lifetime Value

Short-term lifts are easy to celebrate and easy to regret. The engine tracks how price changes affect repeat behavior and lifetime value, especially when discounts start training customers to wait. Sometimes the “win” is not a lower price; it is stable pricing that resets expectations and protects margin over time.

Anchoring, Fatigue, and Resistance Zones

Customers adapt faster than most teams realize. A discount that once moved demand can lose impact as buyers learn the pattern. Price increases can also hit resistance zones where trust breaks and hesitation spikes. The system detects those zones early so the pricing strategy evolves before performance erodes.

What comes out of these layers is not complexity for its own sake. It is restraint. Fewer unnecessary discounts, clearer boundaries, and pricing decisions that feel calmer because they are grounded in accumulated evidence.

Results Observed Across Early Deployments

When pricing is treated as something you can measure and improve, results tend to show up without drama. There is rarely a single big moment. What changes is the baseline. Profit per order improves, discount dependence fades, and pricing decisions stop feeling like a gamble.

Across early pilots, the most consistent outcome is not a dramatic overnight win but a quieter improvement in baseline economics: fewer unnecessary discounts, clearer price boundaries, and margin lift that does not depend on finding new traffic. In many cases, the first gains come from simple corrections: products that were underpriced for no good reason, discounts that were running out of habit, segments that were being overcharged without anyone noticing. Once those are surfaced, the business stops leaking margin in small, invisible ways.

One of the more telling patterns in pilots is how often conversion holds steady during well-chosen price increases. Teams expect a drop because they have been trained to fear raising prices. When tests are scoped properly and chosen based on real elasticity, price can move up with only a minor change in conversion, and the margin improvement more than makes up the difference. That is usually the moment pricing stops being emotional inside the company.

Discounting also becomes less reactive. When the system shows which promotions do not materially change demand, teams stop using discounts as a default response to softness. Revenue becomes less volatile because fewer artificial spikes are introduced, and customers stop being trained to wait for the next deal.

Average order value often improves as a side effect. When entry-point products are priced correctly, basket behavior changes. Customers flow into higher-margin combinations more naturally, and attach rates improve without forcing aggressive upsells.

Speed is another benefit that is easy to underestimate. Pricing mistakes surface early. Negative signals show up fast, and tests can be rolled back before they leave a lasting dent. That shortens feedback loops and lowers the cost of being wrong, which makes teams more willing to learn instead of freezing.

The practical outcome is stability. Less scrambling, fewer gut decisions, and a pricing process that gets sharper the longer it runs.

Why This System Requires Deep Integration

The pricing intelligence tool only works when it is anchored in reality. Surface-level data can tell you what happened, but it cannot explain why it happened or what will happen if you change something. That is the gap this system is built to close, and it only closes with deep, order-level access.

Most pricing tools operate on partial views. They look at traffic, conversion rates, or competitor prices in isolation and try to infer behavior from the outside. What they miss is context. Without seeing full orders, you cannot understand basket effects. Without customer history, you cannot separate loyalty from price sensitivity. Without timing and sequence, you cannot tell whether demand shifted because of price or because the environment changed. Shallow inputs lead to confident conclusions that do not survive contact with reality.

The integration itself is deliberately conservative. Read-only API access is enough. On Shopify, that means pulling order, customer, product, and discount data, then reconciling the effective price customers actually paid, including discounts, shipping incentives, and offer mechanics introduced by apps. If you run Shopify Plus, we also account for Markets, B2B catalogs, and customer-specific pricing rules that shape price perception across segments. The system does not need to push prices live or interfere with operations to learn. It observes how customers behave, models responses, and produces recommendations. Action stays in the hands of the business. That keeps risk low while preserving data fidelity.

Depth also builds trust. When every recommendation can be traced back to real orders and identifiable segments, pricing stops feeling like a black box. Teams can see where the insight came from and why it applies. That transparency matters, especially when pricing decisions carry emotional and financial weight.

Confidentiality is treated as a baseline requirement. Data is isolated by merchant. Analysis runs within that boundary. Outputs belong to the business generating them. Nothing is reused, benchmarked, or shared externally. The system learns within the environment it is given and nowhere else. Without this level of integration, pricing tools speculate. With it, they learn.

How Businesses Use the Output in Practice

The output of the pricing engine is not a set of instructions to follow blindly. It becomes a shared source of truth that changes how pricing decisions get made inside the business.

For Shopify Plus teams, it often starts as cleanup across Markets and customer segments: tightening price floors for low-sensitivity cohorts, reducing blanket discounting in paid channels, and setting clearer boundaries for when a code should exist at all. The result is usually fewer promotions, not more, because the business finally knows which levers work.

From there, teams use the insights to make deliberate adjustments instead of reactive moves. Prices change with a clear understanding of which products can absorb increases, which segments need protection, and where small shifts create outsized impact. Conversations that used to revolve around opinions become shorter and more focused because the trade-offs are already visible.

Over time, the learning shows up in planning, not just day-to-day tweaks. Merchandising decisions are shaped by how products behave at different price points, not just by revenue or sell-through. Promotions are designed with a clearer sense of what will actually change demand and what will simply erode margin. Pricing becomes part of strategy instead of a last-minute response.

The system also helps align pricing with customer value. Instead of treating all buyers the same, teams see how different customers respond to different signals. Loyal customers are protected from unnecessary friction. Price-sensitive segments are handled intentionally rather than punished by blunt discounts. This improves both conversion quality and long-term relationships.

Most importantly, pricing becomes repeatable. Tests follow a consistent process. Outcomes are recorded and learned from. Decisions do not disappear into spreadsheets or institutional memory. Over time, pricing stops being a source of internal debate and starts functioning like an optimization process that improves simply by being used.

Why This AI Price Testing Engine Is Hard to Replicate

On the surface, many pricing tools look similar, especially competitive pricing tools. Dashboards, alerts, rules, and automation are easy to ship. What is not easy to copy is a system that learns from real commerce behavior, improves with every test, and produces decisions that hold up outside a slide deck.

This engine is shaped by live order data and continuous experimentation, not static models or theoretical assumptions. Elasticity is learned, not declared. Confidence is earned through repeated exposure to real customer behavior. The system gets better because it runs inside operating businesses where price decisions have consequences, not because it was configured once and left alone.

The difficulty compounds when pricing moves across multiple dimensions at the same time. Products interact. Segments respond differently. Channels behave inconsistently. Time distorts signals. Running controlled experiments without contaminating results, while still learning fast enough to matter, requires discipline and infrastructure that rule-based systems cannot support. Pricing behavior is not stable, and tools that assume it is eventually break.

Dashboards fail for a different reason. They explain what happened, but they do not tell you what to do next. Knowing that conversion dipped does not explain whether price caused it, whether the dip will persist, or whether changing price again would make things better or worse. Decision intelligence lives in that gap.

That is why pricing cannot remain a static decision. In modern e-commerce, it has to be measured, tested, and improved the same way conversion and retention are improved. AI price testing makes that possible by turning pricing into a learning loop: small tests, real behavior, growing confidence, and decisions grounded in evidence instead of instinct.

If you want to test-drive this AI price testing system on your own data, contact us.