Shopify Works—Until It Doesn’t

If you’re running a high-volume ecommerce business in the industrial sector on Shopify Plus, there’s a moment, quiet but unmistakable, when things start to bend. Orders slow. Inventory updates lag. Pricing overrides stall halfway through. Your ERP throws an error, and your ops team starts noticing that not everything is syncing the way it should. At first, it feels like a glitch. Then it becomes a pattern.

This isn’t Shopify failing. It’s Shopify doing exactly what it was built to do: protect the platform from overload. But if your sync architecture can’t keep up, you’re not just risking delays, you’re jeopardizing online sales that depend on accurate pricing, stock, and fulfillment signals.

The real issue? Most industrial businesses don’t outgrow Shopify’s API on day one. They drift into it. First, it’s a daily sync job that works perfectly, until your SKU count triples. Then it’s a pricing update that pushes cleanly, until customer-specific pricing logic kicks in. The ceiling doesn’t crash down. Your volume rises until you collide with it.

But here’s the truth most mid-market teams miss: Shopify isn’t the bottleneck. Your architecture is. And if you’re treating Shopify Plus like a server you can flood with calls, you’re going to break the very system you rely on to scale.

This guide isn’t for stores selling t-shirts out of a warehouse. It’s for complex operations syncing tens of thousands of SKUs across multiple software systems—ERP, WMS, PIM, CRMs—with a mix of pricing logic, availability thresholds, and custom workflows that touch every corner of your stack.

We’re going to show you how to rethink your system design from the ground up. Not to fight Shopify’s API limits, but to flow with them and to move smarter.

The Hidden Cost of API Saturation in Industrial Ecommerce

The API ceiling doesn’t always announce itself with flashing lights or urgent failures. Sometimes, it slips in quietly. Your inventory updates start missing a few SKUs. A pricing sync job finishes, but half the changes never make it to the web page buyers see. Your ERP confirms an order, but Shopify’s backend never reflects it. The system runs, but not fully. And that “almost synced” reality is where trust begins to unravel.

In industrial ecommerce, where buyers don’t just want accuracy, they depend on it, small API mismatches turn into major operational liabilities. A $12,000 turbine listed as “in stock” when your warehouse is actually on backorder doesn’t just trigger a refund. It sparks phone calls, apologies, escalations, and, eventually, loss of confidence. For B2B buyers working with long lead times, custom specs, and negotiated pricing, these aren’t just glitches. They’re violations of expectation.

The real-world cost of hitting Shopify’s API limits isn’t just a 429 error code or a failed sync job. It’s the invisible erosion of your credibility. When a pricing update fails midway through a quote cycle and two buyers get two different numbers for the same part, your brand takes the hit. When inventory on a product shifts but doesn’t reflect in time on the frontend, your fulfillment team scrambles to undo what the system didn’t prevent. And when sync jobs quietly collapse without alerting anyone, you don’t just lose efficiency—you lose trust, both internally and externally.

The most dangerous part? None of this feels like a catastrophic failure. It’s not a system going down. It’s a system falling slightly out of sync, over and over again, until the people who depend on it stop believing in it.

Shopify didn’t cause this. It’s doing exactly what it was built to do: enforce order, stability, and fairness across a platform serving millions of merchants. What caused the fragility is the assumption that you could scale without architectural discipline. That the way you integrate at 10,000 SKUs would hold up at 100,000. That syncing everything all at once would always be fine.

But Shopify punishes inefficiency relentlessly. And in high-volume environments, efficiency isn’t about developer speed. It’s about architectural restraint.

Why Shopify’s Limits Are Not the Problem—Your Architecture Might Be

It’s easy to blame Shopify when your data starts falling out of sync. After all, it’s the last system in the chain, the one that displays the wrong inventory, shows the wrong price, or delays an order confirmation. But the truth is, Shopify’s API limits are rarely the root problem. More often, they expose flaws in how your backend and core business processes were built to scale.

Shopify Plus isn’t built to give you unlimited throughput. It’s built to give you predictable, controlled access to shared infrastructure. That means every request—whether REST or GraphQL—must fit inside a set of rate and cost boundaries. Shopify’s job is to ensure that no single store, even a $100M industrial brand, can hog resources and affect performance for the entire ecosystem.

Most ecommerce platforms don’t give you this much clarity about your usage. But they also don’t care what you send, how often, or whether it breaks your downstream systems. Shopify does. Its limits are hard-coded reminders to build like an engineer, not just a developer.

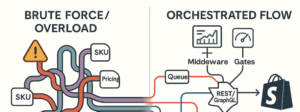

The challenge isn’t the limit itself—it’s the lack of architecture behind the scenes. Many industrial brands still approach data processing like they did a decade ago: nightly batch jobs pushing every product to Shopify, regardless of whether anything changed. Price updates that resync entire categories instead of just deltas. Inventory feeds that hit every SKU, even if only five need updating. That brute-force mentality works when you’re small. At scale, it’s reckless.

If you’re syncing live inventory from five warehouses, calculating pricing per customer tier based on contract terms, and pushing thousands of changes per day, you’re not just “running a store.” You’re managing a real-time transactional data system on top of a platform with strict throughput policies. And every lazy assumption, every unnecessary query, every duplicated job, every update without a change becomes another crack in your foundation.

The fix isn’t asking Shopify for higher limits. It’s designing your system so well that you never need them.

Understanding the Rules (and the API Documentation) Behind Modern Web APIs

To design a system that flows instead of fails, you need to understand the rules of the platform you’re building on. Shopify Plus doesn’t make it hard—they publish their API limits clearly. The mistake most teams make is treating those numbers as rough guidelines rather than hard constraints that shape how every sync should behave.

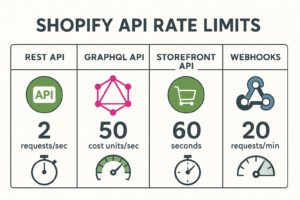

Let’s break down the structure.

Shopify’s REST Admin API allows four requests per second per app per store. You’re given a burst pool of 40 requests that replenish gradually over time. It’s a simple, linear throttle—fast to implement, but easy to overwhelm. This works fine for lightweight operations. But once you’re syncing 20,000 SKUs from an ERP or processing real-time price changes, you hit the cap fast and silently.
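To make the REST bucket concrete, here is a minimal Python sketch that reads the bucket state Shopify returns with REST Admin responses in the `X-Shopify-Shop-Api-Call-Limit` header (formatted like `32/40`). The function names, the `headroom` parameter, and the default `leak_rate` of 4 (the per-second figure quoted above) are illustrative assumptions; confirm the rate that applies to your plan before relying on it.

```python
from typing import Tuple


def parse_call_limit(header_value: str) -> Tuple[int, int]:
    """Split a header value like "32/40" into (used, bucket_size)."""
    used, bucket_size = header_value.strip().split("/")
    return int(used), int(bucket_size)


def seconds_to_wait(header_value: str, leak_rate: float = 4.0, headroom: int = 5) -> float:
    """Return how long to pause so that at least `headroom` slots are free.

    leak_rate is the assumed number of slots freed per second.
    """
    used, bucket_size = parse_call_limit(header_value)
    free = bucket_size - used
    if free >= headroom:
        return 0.0
    # Each second frees `leak_rate` slots, so wait for the shortfall.
    return (headroom - free) / leak_rate


# Example: the bucket is nearly full, so the caller should pause briefly.
print(seconds_to_wait("39/40"))
```

Checking the header after every response lets a sync job slow down before the bucket overflows, rather than after the first 429.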

Then there’s GraphQL, which uses a cost-based model. Each request has a cost based on its complexity: how much data you’re asking for, how deeply it’s nested, and how many fields are returned. You’re allowed 1,000 cost units per minute, with bursts up to 2,000. This sounds like a lot until a single product query, complete with variants, inventory levels, and metafields, burns through 80 or 100 units. Ten of those, and your entire API budget is spent for the minute.
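Shopify's GraphQL Admin responses report their own cost under `extensions.cost`, including a `throttleStatus` object with `maximumAvailable`, `currentlyAvailable`, and `restoreRate`. The sketch below, written under the assumption that you already have the parsed JSON response in hand, shows one way to decide how long to wait before sending the next query. The function name and the sample numbers are hypothetical.

```python
def wait_before_next_query(response_json: dict, next_query_cost: float) -> float:
    """Return seconds to wait until `next_query_cost` points are available."""
    status = response_json["extensions"]["cost"]["throttleStatus"]
    if next_query_cost > status["maximumAvailable"]:
        # No amount of waiting helps; the query must be made cheaper.
        raise ValueError("query cost exceeds the maximum available budget")
    shortfall = next_query_cost - status["currentlyAvailable"]
    if shortfall <= 0:
        return 0.0
    # restoreRate is the number of points restored per second.
    return shortfall / status["restoreRate"]


# Sample response fragment with made-up numbers for illustration.
sample = {
    "extensions": {
        "cost": {
            "requestedQueryCost": 100,
            "actualQueryCost": 92,
            "throttleStatus": {
                "maximumAvailable": 1000.0,
                "currentlyAvailable": 40,
                "restoreRate": 50.0,
            },
        }
    }
}
print(wait_before_next_query(sample, next_query_cost=100))
```

Because the budget figures come back with every response, the middleware does not need to hard-code them; it can read the live values and adapt.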

Add in the Storefront API (designed for public-facing site interactions, not backend processing) and Webhooks (which are throttled to 100 deliveries per second), and you start to see the full picture: Shopify is generous, but precise. You can do almost anything—if you do it efficiently.

Here’s a quick overview to anchor your thinking:

| API Type | Limit Type | Default Limit | Notes |
| --- | --- | --- | --- |
| Admin REST API | Requests per second | 4 requests/sec per app per store | Burst pool of 40; simple linear throttle |
| Admin GraphQL API | Cost-based (units/minute) | 1,000 cost units/min (2,000 burstable) | Query cost varies by depth and fields; needs budgeting logic |
| Storefront API | Requests per second | 60 requests/sec | Designed for customer-facing queries, not backend syncs |
| Webhooks | Throughput queue | 100 deliveries/sec | Under load, Shopify queues and delays excess events |

The key insight here isn’t the numbers, it’s how you work with them. Shopify’s API architecture rewards those who budget their calls like currency. It punishes those who treat it like a firehose.

If your system assumes infinite bandwidth, it will break. If it assumes constraint, it will scale.

And that’s the difference between a system that survives growth and one that collapses under its own traffic.

What Breaks at Scale—From Inventory to Customer Data Integrity

When Shopify Plus API limits are exceeded, the system doesn’t explode; it erodes. What fails isn’t a single request, but the assumption that every process will finish cleanly. You see it in sync jobs that complete without updating all records. In pricing updates that skip a handful of SKUs without warning. In order status pushes that silently time out and never reach the ERP. These failures rarely surface as errors anyone sees. They accumulate quietly until your operations team starts losing confidence in the system, and your customers start feeling the effects.

The most common breakdown? Inventory mismatches. A product looks available on the frontend, but the ERP hasn’t been able to sync changes fast enough due to rate throttling. A buyer clicks “Add to Cart,” gets a shipping ETA, and even receives an order confirmation, only for your warehouse to flag a backorder. That’s not just a missed sync. That’s a broken promise.

Then there’s pricing. In B2B industrial environments, where customer-specific pricing is the norm, syncing pricing logic from ERP to Shopify is delicate. It often involves metafields, customer tags, custom scripts, or dynamic pricing apps. When an API call fails halfway through a tiered update, some buyers see the new rate, others see the old one. Your sales team scrambles, your support team takes the heat, and your margin disappears under confusion and miscommunication.

Even order status can become a source of chaos. If your fulfillment system relies on Shopify status updates to ship or invoice correctly, and those updates get throttled or lost, you’re looking at fulfillment delays, billing mismatches, and SLA breaches that erode trust at the enterprise level.

The most dangerous failures are the silent ones, because Shopify doesn’t alert you when you’ve been throttled. There’s no email, no blinking light. The only signals are in the API responses themselves, such as 429 status codes and Retry-After headers, and you have to design your architecture to listen for them. And if you don’t? You’ll find out about the failure when a customer calls. Or worse, when they don’t call, and just leave.

The root cause isn’t usually bad code. It’s the system architecture that didn’t respect the platform’s rules. That treated every sync as equally important. That assumed “try again later” would fix things.

At scale, retrying blindly isn’t resilience. It’s a liability. And every unnecessary call you make brings you closer to the edge of your API budget, leaving you with fewer resources when the critical events hit.

Architecting for Constraint — Build a System That Thinks in Flow

If your backend system treats Shopify like a bottomless pit it can dump data into, you’re going to run into problems. Industrial-scale operations don’t need brute force. They need orchestration.

True scalability means designing a system that flows, not floods. And that starts with one key principle: every API call is a finite resource.

1. Define What Actually Matters

Start by auditing your integrations. Which tasks drive revenue, trust, or operational continuity? A few examples:

- Inventory updates by warehouse

- Contract pricing logic

- Order status syncs

- Tier-based customer tags

- Metafield updates for compliance

- Spec sheets or documentation links

You’ll quickly realize not all data is created equal. Updating a product description is not as urgent as making sure a $12,000 pump shows up as in-stock. Knowing that helps you prioritize.

2. Switch to GraphQL Where It Makes Sense

Shopify’s REST API gives you 4 calls per second, per app, per store, with a burst pool of 40. GraphQL operates on a cost model: 1,000 units per minute, up to 2,000 with bursts. That means you can structure one smart GraphQL query to fetch or push more data than 10 REST calls combined—if you’re strategic.

But GraphQL isn’t a magic bullet. It needs design. Shopify even gives you a cost calculator to estimate your usage before running a query. Use it. Balance cost. Think like you’re budgeting API throughput, because you are.

3. Build Middleware That Knows How to Wait

You will get throttled. That’s not failure. What matters is how your system responds.

Your middleware layer needs:

- Queued execution: Break large jobs into timed batches.

- Backoff logic: Delay retries exponentially after each 429.

- Retry limits + alerts: Don’t retry forever—know when to pause and alert.

- Priority tags: Orders and inventory first. Spec sheet updates last.
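The backoff and retry-limit items above can be sketched in a few lines of Python. This is a minimal illustration, not a production client: `send` is a hypothetical callable that performs one API call and returns `(status_code, retry_after_seconds_or_None)`, and the default attempt count and delays are assumptions to tune for your own workload.

```python
import time
from typing import Callable, Optional, Tuple


class RetriesExhausted(Exception):
    """Raised when a call is still throttled after the final attempt."""


def send_with_backoff(
    send: Callable[[], Tuple[int, Optional[float]]],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
    alert: Callable[[str], None] = print,
) -> int:
    """Call `send`, backing off exponentially after each 429 response."""
    for attempt in range(max_attempts):
        status, retry_after = send()
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Prefer the server's Retry-After hint; otherwise double each time.
            if retry_after is not None:
                delay = retry_after
            else:
                delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay)
    # Retry limit reached: stop hammering the API and tell a human.
    alert(f"still throttled after {max_attempts} attempts; pausing job")
    raise RetriesExhausted(f"gave up after {max_attempts} attempts")
```

Passing `sleep` and `alert` as parameters keeps the function easy to test and lets you route alerts to whatever paging tool your team already uses. Adding random jitter to each delay is a common refinement when many workers retry at once.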

Good middleware doesn’t panic. It adapts. And it keeps your ops team from being caught off guard at 2 a.m.

4. Segment by App and Workflow

Shopify’s API limits apply per app. That means you can break workloads into isolated streams:

- Separate apps for ERP vs WMS sync

- Separate API keys for daily vs real-time jobs

- GraphQL for bulk reads, REST for quick writes

This allows you to operate parallel workflows without one hogging resources from the other. It’s not a workaround. It’s smart architecture.

5. Monitor Like You’re Running a Bank

If your API calls fail and nobody notices, you’re not running a store; you’re gambling with your ops. Monitor rate limits. Track retry queues. Set alerts on 429 spikes. Build dashboards that show:

| Metric | What It Tells You |
| --- | --- |
| Rate Limit Usage | How close you are to throttling |
| Retry Backlog Size | Whether sync jobs are getting bottlenecked |
| Error Rate by Job Type | Where your integrations are failing |
| Job Time-to-Completion | How long data takes to appear on frontend |

In a high-throughput industrial environment, visibility is resilience.

Sync What Matters, When It Matters — Queue, Prioritize, Segment

Shopify doesn’t care how important your data is to you. It enforces limits equally. That’s why the smartest operations don’t treat all sync jobs the same—they triage, segment, and prioritize based on impact.

Not All Data Is Created Equal

Imagine this scenario: while your warehouse is updating inventory across 40,000 SKUs, your merchandising team triggers a bulk metafield update for holiday badges. Meanwhile, a contract pricing update is trying to go live for a VIP buyer.

Without sync segmentation, these jobs all fight for the same pool of API calls. Someone loses. Often, it’s the process that matters most.

To avoid this, you need a system that understands context and criticality.

Tier Your Sync Jobs by Business Impact

Here’s how leading industrial brands structure their sync architecture:

| Data Type | Priority | Frequency | Sync Method |
| --- | --- | --- | --- |
| Inventory by Location | High | Real-Time | API + Retry Queue |
| Order Status | High | Real-Time | Webhooks + Fallback |
| Contract Price Updates | Medium | Daily / On-Demand | Scheduled Jobs |
| Product Descriptions | Low | Weekly | Background Queue |
| Spec Sheets / Docs | Low | As Needed | Manual/API Hybrid |

This segmentation turns sync logic into a business function. It aligns technical behavior with operational value.

Smart Queue Logic: Don’t Let Metadata Block Revenue

Once priorities are clear, your queue logic should reflect them.

If pricing updates are queued behind a flood of product tag changes, you’re wasting valuable bandwidth. Queue logic should:

- Assign priority to each job type

- Allocate retry attempts based on criticality

- Pause or delay lower-priority jobs when load is high

- Trigger alerts only for mission-critical failure, not every missed metafield
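As a rough illustration of the queue rules listed above, the Python sketch below uses the standard-library `heapq` module to order jobs by business priority, with first-in-first-out order inside each tier. The job type names and their priority numbers are hypothetical; a production system would more likely sit on a real broker.

```python
import heapq
import itertools
from typing import Any, List, Optional, Tuple

# Lower number = more urgent. These tiers are illustrative assumptions.
PRIORITY = {
    "order_status": 0,
    "inventory": 0,
    "contract_pricing": 1,
    "product_description": 2,
    "spec_sheet": 2,
}


class SyncQueue:
    """Priority queue that never lets cosmetic jobs jump ahead of revenue jobs."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, Any]] = []
        self._sequence = itertools.count()  # tie-breaker keeps FIFO order per tier

    def push(self, job_type: str, payload: Any) -> None:
        entry = (PRIORITY[job_type], next(self._sequence), job_type, payload)
        heapq.heappush(self._heap, entry)

    def pop(self, max_priority: Optional[int] = None) -> Optional[Tuple[str, Any]]:
        """Return the most urgent job, or None if nothing eligible is queued.

        Pass max_priority=0 under heavy load to hold back lower tiers.
        """
        if not self._heap:
            return None
        if max_priority is not None and self._heap[0][0] > max_priority:
            return None
        _, _, job_type, payload = heapq.heappop(self._heap)
        return job_type, payload

    def __len__(self) -> int:
        return len(self._heap)
```

The `max_priority` argument is the piece that implements "pause or delay lower-priority jobs when load is high": the jobs stay queued, they just are not handed out until pressure drops.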

Even better? Queues let you absorb API shocks without losing data. During peak load, you don’t stop syncing—you control the flow, like a smart dam during a flood.

Queue-Based Thinking Isn’t Optional—It’s Scalable Infrastructure

Whether you’re using RabbitMQ, Bull, or AWS SQS, queuing gives your stack elasticity. It ensures that:

- REST calls never exceed burst pools.

- GraphQL queries stay under cost caps.

- Critical updates are never blocked by cosmetic ones.

And when volumes spike—say during a trade event or seasonal reorder cycle—your system flexes. Not fails.

Monitoring, Throttling, and Recovery—How to Build a System That Self-Heals

Once your Shopify Plus architecture begins handling tens of thousands of SKU updates, pricing changes, and ERP syncs, the biggest risk is silence. That is, sync jobs that appear to run but quietly fail in the background. And the consequences are rarely immediate. They show up days later when a quote goes out with the wrong price, a customer sees out-of-date stock, or your fulfillment system ships the wrong part.

Shopify doesn’t send you a friendly warning before you exceed API limits. It just starts responding with 429 Too Many Requests. If your system isn’t watching for it, you won’t know until the error has already affected customers.

Monitor the Metrics That Matter

Your devs may be tracking total API call volume, but that’s not enough. What matters is meaningful monitoring: visibility into how critical syncs behave under real load. A resilient system tracks:

- Rate limit consumption across endpoints

- Retry queue backlogs and aging jobs

- GraphQL cost usage by query

- Error rates by type (especially 429s and failed webhooks)

- Time-to-process per data batch

And it does so continuously, not just during incidents.
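One way to track throttling continuously is a sliding-window counter over recent response codes. The Python sketch below is a minimal in-process version; the class name and the 60-second default window are assumptions, and in practice you would export the resulting ratio to whichever monitoring tool you use.

```python
from collections import deque
from typing import Deque, Tuple


class ThrottleMonitor:
    """Tracks the share of recent responses that were 429s."""

    def __init__(self, window_seconds: float = 60.0) -> None:
        self.window_seconds = window_seconds
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, status)

    def record(self, status: int, now: float) -> None:
        """Store one response status observed at time `now` (in seconds)."""
        self._events.append((now, status))
        self._evict(now)

    def _evict(self, now: float) -> None:
        # Drop events that have aged out of the window.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            self._events.popleft()

    def throttled_ratio(self, now: float) -> float:
        """Return the fraction of in-window responses that were 429s."""
        self._evict(now)
        if not self._events:
            return 0.0
        throttled = sum(1 for _, status in self._events if status == 429)
        return throttled / len(self._events)
```

Timestamps are passed in explicitly so the monitor can be driven by `time.monotonic()` in production and by fixed values in tests.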

Whether you’re using Datadog, New Relic, Prometheus, or a custom dashboard, your visibility should extend across every sync pipeline. Because at scale, a delayed update isn’t a technical blip—it’s a sales loss.

Throttle Proactively, Not Reactively

Once you can see the signals, the next step is throttling with intent.

If your system notices GraphQL usage climbing toward the cost ceiling, it shouldn’t wait for errors. It should start pausing non-urgent jobs, rescheduling background syncs, or batching smaller payloads. The same applies to REST: if you’re approaching burst limits, it’s better to queue intelligently than crash through the ceiling.
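A simple way to express this is an admission rule that narrows which job tiers may run as usage climbs. In the Python sketch below, the tier constants and the 70% and 90% thresholds are illustrative assumptions, not Shopify guidance.

```python
HIGH, MEDIUM, LOW = 0, 1, 2  # lower number = more urgent


def lowest_priority_to_run(
    used_units: float,
    limit_units: float,
    soft: float = 0.7,
    hard: float = 0.9,
) -> int:
    """Return the least urgent tier still allowed at the current usage level."""
    if limit_units <= 0:
        raise ValueError("limit_units must be positive")
    ratio = used_units / limit_units
    if ratio >= hard:
        return HIGH    # near the ceiling: only critical jobs run
    if ratio >= soft:
        return MEDIUM  # getting warm: hold back cosmetic jobs
    return LOW         # plenty of budget: everything runs


def should_run(job_priority: int, used_units: float, limit_units: float) -> bool:
    """True if a job of this priority may be dispatched right now."""
    return job_priority <= lowest_priority_to_run(used_units, limit_units)
```

For example, at 750 of 1,000 units used, a spec sheet update (low priority) would be held while an inventory update (high priority) still goes out.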

This logic turns a blunt system into an adaptive one—something that flows with Shopify’s guardrails instead of slamming against them.

Recovery Logic That Builds Trust

When failure happens, and it will, how you recover defines your reliability.

A basic system retries failed jobs. A great system retries intelligently:

- It isolates only the failed records, not the entire batch.

- It adds exponential backoff logic (wait 30s, then 60s, then 2min).

- It alerts ops if failures persist beyond a set threshold.

- It logs every retry with timestamp, context, and status.
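The recovery behaviors listed above can be sketched as a small Python loop that retries only the records that failed, waits 30, 60, and then 120 seconds between passes, and logs each attempt. Here `push` is a hypothetical callable that sends one record and returns `True` on success; whatever is returned at the end is what should be escalated to ops.

```python
import time
from datetime import datetime, timezone
from typing import Any, Callable, Iterable, List, Optional, Sequence


def sync_with_record_retries(
    records: Iterable[Any],
    push: Callable[[Any], bool],
    delays: Sequence[float] = (30, 60, 120),
    sleep: Callable[[float], None] = time.sleep,
    log: Optional[List[dict]] = None,
) -> List[Any]:
    """Push records, retrying only failures; return records that never succeeded."""
    log = log if log is not None else []
    pending = list(records)
    # First pass runs immediately; later passes wait with a doubling delay.
    for attempt, delay in enumerate((0, *delays)):
        if delay:
            sleep(delay)
        still_failing = []
        for record in pending:
            ok = push(record)
            log.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "record": record,
                "attempt": attempt,
                "ok": ok,
            })
            if not ok:
                still_failing.append(record)
        pending = still_failing  # only failed records go into the next pass
        if not pending:
            break
    return pending
```

Because successful records drop out after each pass, a batch of thousands with three bad rows costs three extra calls per retry, not thousands.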

Even more important? It maintains continuity for the user. If an ERP-triggered update stalls, the system should either fall back to cached data or show a soft warning, never expose an empty page or outdated inventory.

This is how you earn trust. Not with promises of uptime, but with architecture that plans for failure and builds gracefully back to integrity.

Advanced Caching & Delta Sync Logic

In high-volume environments, brute force is a liability. The smartest way to scale is to make fewer calls, by caching what doesn’t change and syncing only what does.

Most inefficiencies come from redundant requests. Systems re-fetch product data that hasn’t changed in months or poll for inventory on static SKUs. Instead, segment your data by volatility:

- Cache stable specs, metafields, and documents with long TTLs.

- Use real-time polling or webhook-triggered updates for volatile data like inventory or order status.

- Apply delta logic: only sync SKUs whose updated_at timestamps—or content hashes—have changed.
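Content-hash delta detection, the last item above, can be sketched in Python with the standard library. The record shape, SKU keys, and the idea of keeping a `last_synced_hashes` mapping somewhere durable are assumptions for illustration.

```python
import hashlib
import json
from typing import Dict


def content_hash(record: dict) -> str:
    """Return a stable SHA-256 hash of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_records(
    records: Dict[str, dict],
    last_synced_hashes: Dict[str, str],
) -> Dict[str, dict]:
    """Return only the SKUs that are new or whose content changed since last sync."""
    return {
        sku: record
        for sku, record in records.items()
        if last_synced_hashes.get(sku) != content_hash(record)
    }


# Example: only SKU-2 is new, so only SKU-2 would be sent to Shopify.
previous = {"SKU-1": content_hash({"price": "19.99", "qty": 4})}
current = {
    "SKU-1": {"qty": 4, "price": "19.99"},
    "SKU-2": {"price": "5.00", "qty": 0},
}
print(list(changed_records(current, previous)))
```

After a successful push, store the new hash for each synced SKU so the next run compares against what Shopify actually has.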

This means fewer wasted calls, faster sync times, and a system that behaves more like an orchestration engine than a brute-force script.

Queueing, Scheduling & Sync Intelligence

At scale, sequencing matters more than speed. Shopify’s API limits aren’t meant to be broken through; they’re meant to be worked with. That’s why queueing is essential. When thousands of updates are triggered—say, 50,000 inventory or pricing changes—the smart move isn’t to push them all at once. It’s to stagger them into batches that align with Shopify’s rate limits.
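A quick calculation shows why staggering matters. Using the REST figure of 4 calls per second quoted earlier, 50,000 single-record updates need at least 12,500 seconds, or roughly three and a half hours. The Python sketch below computes that estimate and paces batches accordingly; the batch size of 40 and the `send` callable are illustrative assumptions.

```python
import time
from typing import Any, Callable, Iterator, Sequence


def batches(items: Sequence[Any], size: int) -> Iterator[Sequence[Any]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def estimated_seconds(total_calls: int, calls_per_second: float) -> float:
    """Lower bound on how long a job takes at a sustained call rate."""
    return total_calls / calls_per_second


def run_paced(
    items: Sequence[Any],
    send: Callable[[Any], None],
    calls_per_second: float = 4.0,
    batch_size: int = 40,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Send items in batches, pausing so the average rate stays at the limit."""
    for batch in batches(items, batch_size):
        for item in batch:
            send(item)
        # Pause long enough for the calls just made to drain from the bucket.
        sleep(len(batch) / calls_per_second)


print(estimated_seconds(50_000, 4))  # 12500.0 seconds
```

Knowing the lower bound up front tells you whether a job can finish inside its window at all, or whether it needs delta logic or bulk operations instead.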

A resilient queueing system transforms potential failure points into manageable workflows. Instead of creating an avalanche of requests that overwhelms the API, queues regulate flow, retry failed jobs with built-in logic, and prioritize the most critical updates first. For example, a change to inventory status or customer pricing gets handled immediately, while metafield or SEO copy updates are scheduled further down the pipeline.

When you layer in dynamic scheduling, your syncs no longer operate on a fixed clock. They respond to business signals: a new shipment arriving, a stockout threshold being triggered, a spike in order volume for a specific region. These real-world events prompt real-time updates, allowing your middleware to act with precision rather than brute repetition.

In this architecture, nothing is left to chance. Your systems aren’t guessing when to sync—they’re listening, reacting, and sequencing updates in harmony with the platform’s rules. It’s not just a performance win. It’s an operational advantage.

Shopify Plus API Scaling Checklist for Industrial Brands

Scaling high-volume operations on Shopify Plus doesn’t just depend on raw speed or developer muscle. It depends on how smart your architecture is—and whether your sync, caching, and monitoring systems are built to respect the platform’s boundaries without sacrificing operational performance.

Here’s a practical toolkit to evaluate and upgrade your infrastructure:

Sync Logic Checklist

| Question | Goal | Your Status |
| --- | --- | --- |
| Are you using delta logic for inventory, pricing, and product updates? | Reduce unnecessary API calls by syncing only what’s changed | ☐ Yes ☐ No |
| Have you eliminated full syncs for data that rarely changes (e.g., metafields)? | Prevent bloated jobs and avoid wasteful polling | ☐ Yes ☐ No |
| Have you classified syncs by urgency (e.g., critical vs. cosmetic)? | Prioritize data that affects revenue or trust | ☐ Yes ☐ No |
| Are you coordinating syncs across multiple APIs (ERP, WMS, PIM, pricing)? | Avoid conflicting updates and ensure sequence integrity | ☐ Yes ☐ No |

Smart Queue Architecture

| Queue Element | Purpose | Implemented? |
| --- | --- | --- |
| Batch queueing for high-volume updates | Prevents throttling by spacing requests | ☐ Yes ☐ No |
| Retry logic with exponential backoff | Recovers gracefully from 429 errors | ☐ Yes ☐ No |
| Priority handling by data type | Ensures inventory and pricing are never delayed | ☐ Yes ☐ No |
| Alerting on stuck or failed jobs | Surfaces issues before they affect buyers | ☐ Yes ☐ No |

Data Segmentation Table

Not all sync data is created equal. Use the table below to classify your jobs by urgency and assign the correct processing logic.

| Data Type | Priority | Sync Frequency | Sync Method |
| --- | --- | --- | --- |
| Inventory availability | High | Real-time or hourly | Queue + Retry + API |
| Order status updates | High | Event-based | Webhook + API |
| Pricing tiers | Medium | Daily | Scheduled job with delta detection |
| Product descriptions | Low | Weekly | Batched sync or CMS-driven |
| Spec sheets & documents | Low | As needed | Manual trigger or low-priority batch |

Monitoring & Observability Metrics

| Metric | Why It Matters | Tool/Method |
| --- | --- | --- |
| Rate limit usage per app/store | Prevents silent throttling | Shopify headers, Datadog |
| Queue backlog depth & job lag | Detects system congestion | Redis UI, Bull dashboard |
| Error rate by endpoint (429s, 5xx) | Indicates retry needs or design flaws | API logs, monitoring agent |
| Time-to-sync from ERP to Shopify | Measures operational responsiveness | Custom dashboard, timestamp diff |
| Webhook delivery failures | Protects from sync gaps | Shopify logs + retry queue |

Pre-Failure Alert Triggers (Set These!)

| Alert Condition | Why You Need It |
| --- | --- |
| GraphQL usage > 80% of quota in a 1-minute window | Prevent burst failures |
| More than 5 consecutive 429 responses | Trigger pause on low-priority jobs |
| Queue lag exceeds 60 seconds | Indicates processing backlog |
| Missed sync for high-priority job > 5 minutes | Signals risk to trust or revenue |
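The alert conditions in the table above translate directly into a small rule check. The Python sketch below assumes a hypothetical `SyncSnapshot` that your monitoring layer fills in on each evaluation cycle; the field and alert names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SyncSnapshot:
    """Point-in-time metrics gathered by the monitoring layer (hypothetical shape)."""
    graphql_units_used_last_minute: float
    graphql_units_quota: float
    consecutive_429s: int
    queue_lag_seconds: float
    seconds_since_high_priority_sync: float


def triggered_alerts(snapshot: SyncSnapshot) -> List[str]:
    """Return the names of every pre-failure condition that currently holds."""
    alerts = []
    if snapshot.graphql_units_used_last_minute > 0.8 * snapshot.graphql_units_quota:
        alerts.append("graphql_usage_above_80_percent")
    if snapshot.consecutive_429s > 5:
        alerts.append("consecutive_429s")
    if snapshot.queue_lag_seconds > 60:
        alerts.append("queue_lag")
    if snapshot.seconds_since_high_priority_sync > 5 * 60:
        alerts.append("high_priority_sync_missed")
    return alerts
```

Each returned name can then be mapped to a routing rule, so that a queue lag warning and a missed high-priority sync do not page the same person at the same urgency.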

All alerts should route to Slack, Opsgenie, or PagerDuty with job metadata attached.

This isn’t just a checklist. It’s a mirror. If you can’t tick most of these boxes with confidence, your infrastructure isn’t truly ready for high-throughput B2B commerce. But the good news? Each improvement compounds. Every smart queue, every alert rule, every delta-based job gives you more breathing room—until your platform limits stop feeling like a ceiling.

Outpace the Limits, Don’t Fight Them

Shopify Plus isn’t broken. It’s just honest. It tells you how much you can push—and punishes you when you ignore the rules.

If you’re running a high-volume industrial operation, you can’t afford brute force. You can’t rely on syncs that “usually” work, or middleware that fails silently under pressure. Your buyers expect reliability, your ops team needs clarity, and your business can’t scale on a backend built on guesswork.

The real competitive edge isn’t more bandwidth. It’s better architecture.

When you design for constraint, you win more than uptime. You win trust. You win accuracy. You win time. Because in B2B ecommerce, the brand that delivers the right stock, price, and order signal first wins the PO.

If you are ready for action, contact us. We’ll show you where the cracks are—and how to fix them before they break anything else.