How to Manage B2B Subscriptions Across Multiple Locations Without Breaking Your System

For B2B suppliers serving large, multi-location businesses, the challenge isn’t how to bill a customer every month. That’s trivial. The real problem is how to manage paid subscriptions and recurring orders across multiple facilities—each with different product needs, delivery schedules, and operational constraints.

Enterprise buyers don’t think in terms of “subscriptions.” They think in terms of supply continuity.

That means:

- Facility A wants nitrile gloves every two weeks

- Facility B needs restocks of degreaser every 30 days

- Facility C is on a custom 45-day schedule for filters and replacement parts

All of those sites fall under the same umbrella customer account. But each one operates semi-independently. They have different inventory levels, on-site storage limits, safety protocols, and procurement cadences. Forcing them into a single subscription rule set guarantees friction—both for the buyer and for your ops team.

The finance team might insist on centralized invoicing, but the line-level delivery details still matter. If the wrong item shows up at the wrong location on the wrong date, it doesn’t matter that the billing was accurate. The buyer experience breaks and, worse, your platform becomes a liability rather than a value-add.

Most ecommerce platforms—BigCommerce included—aren’t built to handle this out of the box. Subscription logic assumes:

- One account = one delivery address

- One subscription = one recurring rule

- One set of products = global to the account

That works for consumer coffee subscriptions. It fails completely when a distributor is supplying cleaning chemicals to 17 healthcare facilities, each with their own compliance calendar.

And that’s where most implementations go wrong. They focus on recurring billing instead of location-specific fulfillment. They ask “how do we charge the customer every month?” instead of “how do we support facility-level workflows across their entire footprint?”

The right architecture is about building a location-aware system that respects the complexity of modern B2B procurement and then automating that complexity so neither party has to manage it manually.

Why BigCommerce Isn’t Built for Multi-Location Subscription Logic

BigCommerce does not natively support the kind of location-aware subscription logic that B2B suppliers need. Its architecture assumes each customer account represents a single purchasing entity with a uniform set of customer preferences, ignoring the reality of location-level variation. That might work for small-business buyers. But it completely breaks down when the buyer is a national facilities manager coordinating restocks across 30 locations.

There is no default concept of sub-accounts or “child” locations under a parent customer. There is no way to configure variant frequencies tied to site-specific inventory cycles. And there is no visibility into what each location is subscribed to—much less control over subscription management at the facility level.

The third-party apps available in BigCommerce’s ecosystem inherit these same limitations. Subscription tools are typically built for B2C and lightly adapted for basic B2B workflows. They allow a customer to set up recurring orders, manage frequency, and modify contents. But they treat that customer as a single destination, not a complex enterprise with multiple fulfillment endpoints.

In practice, this forces suppliers to choose between two unsatisfactory options: either simplify the subscription model into a universal rule set or manually divide each facility into its own customer account. The former leads to order confusion and fulfillment errors. The latter fragments data, increases administrative burden, and frustrates buyers who want centralized billing and oversight.

Yet BigCommerce is not a closed system. It can be extended, especially in a headless implementation where you treat it as infrastructure, not just a storefront. The platform offers enough flexibility through its APIs, customer grouping logic, and metafields to simulate location-aware behavior. The challenge is in architecting that simulation in a way that operations teams can maintain and procurement teams can actually use.

What you’re building isn’t a subscription tool. It’s a custom procurement engine that happens to live on top of an e-commerce platform. And it only works if the system understands that “customer” means an organization, not a single location.

You’re Not Selling Subscriptions—You’re Powering Enterprise Business Operations

The term “subscription” is misleading in B2B. It suggests a consumer model: recurring billing, fixed products, maybe a loyalty perk. But that’s not what your enterprise customers are trying to solve. They’re not interested in passive reordering; they’re managing supply chains, compliance protocols, and on-site logistics across dozens of independent facilities.

What they need isn’t subscription infrastructure. They need a supply management system that can execute at the location level while reporting at the enterprise level.

Here’s what that actually looks like:

A buyer logs in and sees a centralized account—one billing relationship, one contract, and one admin interface. From there, they manage multiple facilities. Each facility is effectively a mini-account, with its delivery address, its contact person, and its reorder cadence. Products differ by site. Quantities shift over time. The frequency of delivery isn’t global; it reflects how each facility consumes the product on the ground.

And critically, the buyer wants to make adjustments without talking to a rep. If Plant B is ramping production and needs more degreaser, they want to modify that line item’s frequency without disrupting the subscription rules for Plants A or C. They don’t want to delete the entire subscription or start a new one from scratch. They want control, scoped to the right level of responsibility.

Meanwhile, your internal systems still need to behave as if every client is one customer. Finance wants a single invoice. Logistics wants site-specific pick lists. Sales wants to know which facility is increasing usage. And compliance wants records that show exactly what was delivered where and when.

That’s the real operating model you’re solving for. It’s not about turning on recurring orders. It’s about building a location-aware framework for fulfillment and billing that mirrors the structure of your customers’ operations.

Most platforms, including BigCommerce, don’t offer this structure by default. That doesn’t mean you can’t build it. It means you have to stop looking for app settings and start designing systems.

How to Architect a Location-Specific Subscription System in BigCommerce

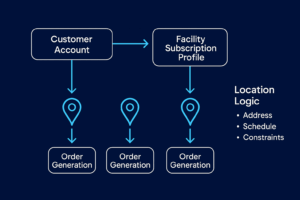

Model the Customer as a Parent Account with Structured Location Data

BigCommerce assumes one account = one buyer. You need to flip that. Treat the customer record as the enterprise parent. Use metafields or external identifiers to define each child location under that account: name, address, contact, and delivery rules. Sync this information from your ERP or build it directly into BigCommerce using custom fields.

Create Multiple Subscription Profiles Within a Single Customer

Your subscription engine needs to support independent streams tied to the same parent account. Each facility gets its own configuration, product mix, frequency, and destination. If your app can’t handle that through the API, it won’t work for B2B. Build around a subscription model, not just a billing loop.

Inject Fulfillment Logic Based on Location Metadata

BigCommerce can’t route orders differently on its own. Simulate that behavior by tagging each order with its originating location. Then pass those tags downstream to your fulfillment tools. If your 3PL or WMS needs a translation layer, use middleware to interpret and route orders based on those location tags.

Build a Location-Aware Interface for Buyers

Your buyer doesn’t want one giant subscription view. They want to see what Plant A is getting, modify what Plant B is receiving, and pause Plant C without touching anything else. That level of transparency and control drives stronger customer engagement, especially in complex B2B workflows. BigCommerce doesn’t support that natively, so you’ll need to build a portal or dashboard using the Storefront API that segments subscriptions by facility.

Maintain a Full Change Log for Procurement and Compliance

Location-level subscriptions can’t be ephemeral. Every adjustment needs traceability: who changed it, what was modified, and when it will take effect. That audit trail isn’t a “nice to have”; it’s required for enterprise buyers working under regulatory, safety, or financial constraints.

Structuring Location Data in BigCommerce the Right Way

The core limitation of BigCommerce is its lack of native support for sub-accounts or organizational hierarchies. If you’re selling into multi-location enterprises, that limitation becomes a major blocker unless you build your own structure around it. The first step in that process is designing how location data lives inside the customer record, not as decoration, but as a primary operational driver.

Modeling Facility-Level Data Inside a Parent Account

BigCommerce allows the use of customer metafields, structured data attached to individual customer records. This is the mechanism you’ll use to embed location logic. Think of each customer account as a shell for an enterprise organization. Inside that shell, you’re storing a list of operational locations, each with its own rules and identifiers.

Each facility should be defined by a consistent object structure: a unique internal ID, a clear location name, a full shipping address, a primary contact (including email and phone), preferred delivery frequency, and any special instructions or constraints. This isn’t just for internal use. The subscription logic, fulfillment system, and customer-facing interface will all read from this data. It has to be structured, reliable, and extensible.
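One way to pin that object shape down is a pair of small dataclasses. Treat this as a minimal sketch: the field names, the `FAC-A` ID format, and the choice to serialize the whole facility list into one JSON string (for example, to store as a customer metafield value) are assumptions for illustration, not something BigCommerce prescribes.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Contact:
    name: str
    email: str
    phone: str


@dataclass
class Facility:
    facility_id: str              # unique internal ID (format is hypothetical)
    name: str                     # human-readable location name
    shipping_address: dict        # street, city, region, postal_code, country
    contact: Contact              # primary contact for deliveries
    delivery_frequency_days: int  # preferred cadence for this site
    special_instructions: str = ""


def to_metafield_value(facilities: list) -> str:
    """Serialize every facility under one parent account to a JSON string."""
    return json.dumps([asdict(f) for f in facilities], sort_keys=True)


plant_a = Facility(
    facility_id="FAC-A",
    name="Plant A",
    shipping_address={"street": "1 Example Way", "city": "Dayton",
                      "region": "OH", "postal_code": "45402", "country": "US"},
    contact=Contact("Sam Lee", "sam.lee@example.com", "+1-555-0100"),
    delivery_frequency_days=14,
    special_instructions="Deliver to dock 2",
)

payload = to_metafield_value([plant_a])
```

Keeping the shape in one typed definition means the subscription logic, the fulfillment layer, and the buyer portal can all read the same fields without guessing at names.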

If this data already exists in your ERP or CRM, treat BigCommerce as a mirror — not the master record. You’ll need either scheduled sync jobs or real-time API integrations to keep both sides in alignment.

Enforcing Structure Through Schema Discipline

Once the object shape for each location is defined, you must enforce consistency. That means defining a schema and sticking to it. Every location object should contain the same fields, in the same formats, with known required and optional parameters. You should not rely on manual convention for this. The schema should live in shared documentation and, ideally, in your middleware logic as a validation layer.

The system should automatically validate the input against the schema when creating a new facility, whether through an API or a customer-facing form. Missing required fields, invalid data types, or unknown flags should trigger an error and prevent propagation. Silent errors here lead to broken fulfillment later.
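A validation layer of this kind can be quite small. The sketch below assumes a hypothetical set of required and optional fields and a plain dictionary as the store; in a real build the same check would sit in your middleware in front of whatever actually persists the record.

```python
REQUIRED = {
    "facility_id": str,
    "name": str,
    "shipping_address": dict,
    "contact": dict,
    "delivery_frequency_days": int,
}
OPTIONAL = {"special_instructions": str}


def validate_facility(record: dict) -> list:
    """Return a list of schema errors; an empty list means the record is valid."""
    errors = []
    for key, expected in REQUIRED.items():
        if key not in record:
            errors.append(f"missing required field: {key}")
        elif type(record[key]) is not expected:
            errors.append(f"wrong type for {key}: expected {expected.__name__}")
    for key, expected in OPTIONAL.items():
        if key in record and type(record[key]) is not expected:
            errors.append(f"wrong type for {key}: expected {expected.__name__}")
    for key in record:
        if key not in REQUIRED and key not in OPTIONAL:
            errors.append(f"unknown field: {key}")
    return errors


def save_facility(record: dict, store: dict) -> None:
    """Reject invalid input loudly instead of letting it propagate."""
    errors = validate_facility(record)
    if errors:
        raise ValueError("; ".join(errors))
    store[record["facility_id"]] = record


good = {
    "facility_id": "FAC-A",
    "name": "Plant A",
    "shipping_address": {"city": "Dayton", "region": "OH"},
    "contact": {"name": "Sam Lee", "email": "sam.lee@example.com"},
    "delivery_frequency_days": 14,
}
bad = {
    "facility_id": "FAC-B",
    "name": "Plant B",
    "shipping_address": {"city": "Akron", "region": "OH"},
    "delivery_frequency_days": "30",  # string instead of int
    "rush": True,                     # flag the schema does not know
}

store = {}
save_facility(good, store)
bad_errors = validate_facility(bad)
```

Returning every error at once, rather than stopping at the first, gives a customer-facing form enough detail to show all the problems in one pass.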

Without schema enforcement, you’re not building a system; you’re managing tribal knowledge, and that always breaks at scale.

Connecting Location Data to Subscription Behavior

Structured data is meaningless unless the rest of your stack uses it. Every subscription profile created in the system must be explicitly linked to a facility. That means embedding the facility’s location ID inside the subscription object and resolving all fulfillment and contact logic from that reference.

The delivery address, contact info, product mix, reorder frequency, and even PO behavior should all cascade from the facility data. If that data changes—a site closes, a new location comes online, contact names shift—the subscription logic must inherit those changes without requiring a rep to step in.
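To make the cascade concrete, here is a minimal sketch in which the subscription stores only a `facility_id` and everything else is resolved from the facility record when the order is built. The dictionary layout and the `resolve_delivery` helper are hypothetical.

```python
def resolve_delivery(subscription: dict, facilities: dict) -> dict:
    """Look up address and contact from the facility record at order time."""
    facility = facilities.get(subscription["facility_id"])
    if facility is None:
        raise LookupError(f"unknown facility: {subscription['facility_id']}")
    return {
        "facility_id": facility["facility_id"],
        "ship_to": facility["shipping_address"],
        "contact": facility["contact"],
        "items": subscription["items"],
    }


facilities = {
    "FAC-B": {
        "facility_id": "FAC-B",
        "shipping_address": {"city": "Akron", "region": "OH"},
        "contact": {"name": "Ana Ruiz", "email": "ana.ruiz@example.com"},
    }
}
subscription = {
    "subscription_id": "SUB-17",
    "facility_id": "FAC-B",
    "items": [{"sku": "DEGREASER-5L", "qty": 12}],
}

before = resolve_delivery(subscription, facilities)

# The contact changes in the facility record; the subscription is untouched.
facilities["FAC-B"]["contact"] = {"name": "Raj Patel",
                                  "email": "raj.patel@example.com"}
after = resolve_delivery(subscription, facilities)
```

Because the subscription never copies the address or contact, the next order picks up the new contact without anyone editing the subscription itself.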

If your subscription engine doesn’t support custom fields or external identifiers natively, you’ll need to extend it with middleware. That middleware becomes the connective tissue between BigCommerce’s limited schema and the broader system logic you need to operate correctly. It tracks what’s tied to what and ensures that updates flow cleanly from data to execution.

Making Location Records Editable Without Breaking Integrity

Customers should be able to manage their own facility data, but only within guardrails. In your customer portal or management UI, give users the ability to add new locations, edit existing ones, and archive inactive sites. But every change needs to pass through the same schema validation you use internally. That keeps the data clean and the behavior predictable.

Certain changes may warrant internal review. For instance, adding a new facility in a restricted geography or changing the delivery cadence beyond contractual thresholds. Those events can trigger internal alerts or enter an approval queue, depending on your operational model. The important thing is that the system enforces structure without relying on your team to manually babysit every entry.

Keeping BigCommerce in Sync with the System of Record

BigCommerce is the transactional interface, not the master database. Your ERP, CRM, or PIM should be the authoritative source of customer and facility data. That means you need a sync layer that pushes clean, validated updates into BigCommerce on a regular basis, ideally via event triggers, not just nightly batch jobs.

If a facility gets renamed in the ERP, that change should be reflected in the BigCommerce metafields. If a location is deprecated or merged with another, its subscriptions should be paused, retired, or reassigned without human intervention. This is where most builds fail: they treat BigCommerce as the primary source of customer data, then struggle to keep other systems aligned. That creates drift, breaks trust, and guarantees downstream errors.
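An event-driven sync handler for those cases might look like the sketch below. The event names (`facility_renamed`, `facility_deprecated`, `facility_merged`) and payload keys are invented for illustration; your ERP will emit its own, and the write-back to BigCommerce is left out.

```python
def apply_erp_event(event: dict, facilities: dict, subscriptions: list) -> None:
    """Mirror one ERP facility event into local facility and subscription data."""
    fid = event["facility_id"]
    kind = event["type"]
    if kind == "facility_renamed":
        facilities[fid]["name"] = event["new_name"]
    elif kind == "facility_deprecated":
        facilities[fid]["active"] = False
        for sub in subscriptions:
            if sub["facility_id"] == fid:
                sub["status"] = "paused"
    elif kind == "facility_merged":
        facilities[fid]["active"] = False
        for sub in subscriptions:
            if sub["facility_id"] == fid:
                sub["facility_id"] = event["merged_into"]
    else:
        # Unknown events fail loudly so drift is noticed, not absorbed.
        raise ValueError(f"unhandled event type: {kind}")


facilities = {
    "FAC-A": {"name": "Plant A", "active": True},
    "FAC-B": {"name": "Plant B", "active": True},
    "FAC-C": {"name": "Plant C", "active": True},
}
subscriptions = [
    {"subscription_id": "SUB-1", "facility_id": "FAC-A", "status": "active"},
    {"subscription_id": "SUB-2", "facility_id": "FAC-B", "status": "active"},
    {"subscription_id": "SUB-3", "facility_id": "FAC-C", "status": "active"},
]

apply_erp_event({"type": "facility_renamed", "facility_id": "FAC-A",
                 "new_name": "Plant A North"}, facilities, subscriptions)
apply_erp_event({"type": "facility_deprecated", "facility_id": "FAC-B"},
                facilities, subscriptions)
apply_erp_event({"type": "facility_merged", "facility_id": "FAC-C",
                 "merged_into": "FAC-A"}, facilities, subscriptions)
```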

What you’re building is a distributed system where BigCommerce plays one role: execution. But the system only works when the location data inside it is current, structured, and treated as operational infrastructure.

Designing Subscription Order Flows That React to Location Logic

A subscription system is only as good as its ability to generate orders that behave correctly, not just financially, but operationally. That means every order must reflect the specific rules of the facility it’s intended for. The logic behind what gets shipped, where it goes, and when it arrives must be driven entirely by structured location data, not assumed from account-level defaults or inferred from order history.

When a subscription is triggered, whether through a scheduled cadence or a manual override, the system must treat it as an execution event scoped to a specific site. The parent customer account serves for authentication and billing, but the fulfillment logic, delivery instructions, and metadata must come from the facility configuration. Address, contact, PO formatting, delivery cadence, and SKU mix—all of that must be resolved in real time from the facility record tied to the subscription profile. Anything short of that creates downstream risk.

The timing logic must be accurate, too. You can’t rely on generic 30-day repeat intervals. The system needs to store and calculate each facility’s schedule based on its own consumption rhythm, seasonal shifts, and operational constraints. The correct cadence isn’t just a static number; it’s determined by past shipment history and future fulfillment windows. The system must track the last delivery date and the next trigger date, and it must account for any interruptions or manual overrides without throwing off the overall cycle.
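One simple way to keep a cycle stable through pauses and off-cycle shipments is to compute run dates on a fixed grid anchored to a start date, instead of adding the cadence to whatever the last delivery happened to be. The sketch below covers only that fixed-cadence case; seasonal adjustments would need more inputs. The function name and parameters are hypothetical.

```python
from datetime import date, timedelta


def next_run_date(anchor: date, cadence_days: int, after: date) -> date:
    """Return the first scheduled slot strictly after `after`.

    Slots fall on anchor, anchor + cadence, anchor + 2 * cadence, and so on.
    """
    if cadence_days <= 0:
        raise ValueError("cadence_days must be positive")
    if after < anchor:
        return anchor
    steps = (after - anchor).days // cadence_days + 1
    return anchor + timedelta(days=steps * cadence_days)


anchor = date(2024, 1, 1)

# Facility C on its 45-day schedule, evaluated on the anchor date itself.
print(next_run_date(anchor, 45, after=date(2024, 1, 1)))   # 2024-02-15

# Same facility after a pause that ended on 10 March: the next slot is
# still on the original 45-day grid, not 45 days after the pause ended.
print(next_run_date(anchor, 45, after=date(2024, 3, 10)))  # 2024-03-31

# Facility A on a two-week cadence.
print(next_run_date(anchor, 14, after=date(2024, 1, 20)))  # 2024-01-29
```

Whether a manual shipment should reset the grid or leave it alone is a business decision; this version leaves it alone, which matches the goal of not throwing off the overall cycle.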

When buyers adjust a subscription, the system must apply that change only to the location in question. There should be no risk of unintended consequences for other sites under the same account. Each facility’s subscription profile should be a contained logic object: self-governing, independently updateable, and versioned. A change should only affect that profile, and only from the appropriate point in the cycle forward. The system should clarify whether changes apply immediately or at the next scheduled run. Anything less introduces ambiguity, and that’s unacceptable at scale.
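A versioned, per-facility profile can be sketched as an append-only history where each version carries the date it takes effect. The `ProfileVersion` shape and helper names below are assumptions; the point is that an edit to one facility adds a version to that facility’s history only, and that the effective date makes "immediately" versus "next run" explicit.

```python
from dataclasses import dataclass, replace
from datetime import date


@dataclass(frozen=True)
class ProfileVersion:
    version: int
    cadence_days: int
    quantity: int
    effective_from: date


def amend(history: list, effective_from: date, **changes) -> list:
    """Return a new history with one more version; earlier versions are kept."""
    latest = history[-1]
    new = replace(latest, version=latest.version + 1,
                  effective_from=effective_from, **changes)
    return history + [new]


def version_on(history: list, run_date: date) -> ProfileVersion:
    """Pick the newest version whose effective date is on or before run_date."""
    eligible = [v for v in history if v.effective_from <= run_date]
    if not eligible:
        raise LookupError("no version in effect on that date")
    return max(eligible, key=lambda v: v.version)


start = date(2024, 1, 1)
profiles = {
    "FAC-A": [ProfileVersion(1, 14, 40, start)],
    "FAC-B": [ProfileVersion(1, 30, 12, start)],
}

# Plant B moves to a 21-day cadence starting with the 1 March run.
profiles["FAC-B"] = amend(profiles["FAC-B"], date(2024, 3, 1), cadence_days=21)

february_run = version_on(profiles["FAC-B"], date(2024, 2, 20))
march_run = version_on(profiles["FAC-B"], date(2024, 3, 1))
```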

Once the subscription fires and an order is generated, the fulfillment engine must route that order using the correct contextual data. This is not just a shipping address; it also covers routing instructions, compliance flags, regional restrictions, and site-specific handling requirements. If the receiving facility requires dock scheduling, that data needs to be included in the order payload. If the product requires HAZMAT documentation, it must be tagged at the SKU level and activated based on destination metadata. If a region restricts certain products, the system should either block the order or route it through an approved alternate flow.
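Those three rules (dock scheduling, HAZMAT documentation, regional restrictions) can be expressed as a single payload builder. This is a sketch under stated assumptions: the flag names, the catalog layout, and the decision to block rather than reroute a restricted order are all illustrative.

```python
def build_order_payload(facility: dict, lines: list, catalog: dict) -> dict:
    """Attach routing and compliance context to a subscription order."""
    region = facility["region"]
    blocked = [
        line["sku"] for line in lines
        if region in catalog[line["sku"]].get("restricted_regions", [])
    ]
    if blocked:
        # This sketch blocks; an approved alternate flow could hook in here.
        return {"status": "blocked", "facility_id": facility["facility_id"],
                "blocked_skus": blocked}
    return {
        "status": "ready",
        "facility_id": facility["facility_id"],
        "ship_to": facility["shipping_address"],
        "lines": lines,
        "requires_dock_appointment": facility.get("requires_dock_scheduling", False),
        "hazmat_docs_required": any(
            catalog[line["sku"]].get("hazmat", False) for line in lines
        ),
    }


catalog = {
    "GLOVES-NITRILE-M": {},
    "DEGREASER-5L": {"hazmat": True, "restricted_regions": ["CA"]},
}
plant_ohio = {"facility_id": "FAC-B", "region": "OH",
              "shipping_address": {"city": "Akron"},
              "requires_dock_scheduling": True}
plant_california = {"facility_id": "FAC-D", "region": "CA",
                    "shipping_address": {"city": "Fresno"}}
lines = [{"sku": "DEGREASER-5L", "qty": 12},
         {"sku": "GLOVES-NITRILE-M", "qty": 40}]

ohio_order = build_order_payload(plant_ohio, lines, catalog)
california_order = build_order_payload(plant_california, lines, catalog)
```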

The order must also be traceable. Your team should be able to look at any subscription-generated order and identify exactly which subscription profile created it, what the rules were at the time of generation, and how the system interpreted those rules. This is not optional. If a shipment goes wrong, traceability determines whether your platform is trusted or blamed. That means embedding the subscription ID, location ID, and configuration snapshot directly into the order object, even if that data isn’t shown in the admin UI.
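A configuration snapshot is straightforward to embed if you copy the profile at generation time and store a hash alongside it. The `trace` key and field names below are hypothetical; where that data lives on the real order object depends on your stack.

```python
import copy
import hashlib
import json


def stamp_order(order: dict, profile: dict) -> dict:
    """Return a copy of the order carrying the rules that produced it."""
    snapshot = copy.deepcopy(profile)  # frozen copy, immune to later edits
    digest = hashlib.sha256(
        json.dumps(snapshot, sort_keys=True).encode("utf-8")
    ).hexdigest()
    stamped = dict(order)
    stamped["trace"] = {
        "subscription_id": profile["subscription_id"],
        "facility_id": profile["facility_id"],
        "config_snapshot": snapshot,
        "config_sha256": digest,
    }
    return stamped


profile = {
    "subscription_id": "SUB-17",
    "facility_id": "FAC-B",
    "cadence_days": 30,
    "items": [{"sku": "DEGREASER-5L", "qty": 12}],
}
order = stamp_order({"order_ref": "ORD-1001"}, profile)

# A later edit to the live profile does not rewrite history on the order.
profile["cadence_days"] = 21
profile["items"][0]["qty"] = 24
```

The deep copy matters: a shallow copy would share the `items` list with the live profile, and a later quantity change would silently alter the recorded snapshot.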

The goal of all of these measures is simple: predictable behavior. Subscription systems exist to remove guesswork. If your system can’t consistently generate orders that reflect the structure of your customer’s operation, you haven’t built a subscription engine; you’ve built a liability.

How Facility-Based Subscription Logic Enhances Customer Satisfaction in B2B

When your subscription system mirrors how your customers actually operate, treating each facility as a node within a unified account, you streamline internal workflows and significantly enhance customer satisfaction.

You no longer need to onboard each facility separately. The buyer doesn’t have to create multiple logins, juggle accounts, or email reps to update shipping details. Every location-specific order stream lives inside one unified structure. The purchasing team at HQ gets full visibility into what each site is receiving, while individual site managers retain autonomy over the specific deliveries they’re responsible for.

On your side, internal complexity starts to collapse. Your system simply reflects the truth of your customers’ operations, eliminating the need to field support tickets about “why did this order go to the wrong address” or “how do I pause just one subscription for one location.” Sales reps stop manually managing subscription exceptions in spreadsheets. Support teams stop digging through emails to resolve fulfillment confusion. Compliance doesn’t need to chase paper trails when an auditor asks for purchase history by site. The system already knows.

Finance also reaps advantages. With facility logic embedded, all orders can roll up into a unified invoicing stream without losing the underlying structure. You can issue one invoice per parent account while still breaking out charges and deliveries by facility. This reduces billing disputes, improves payment cycles, and gives procurement teams exactly what they need for internal reconciliation. It also makes budgeting and forecasting more accurate, because subscription behavior is no longer opaque; it’s predictable and traceable, down to the site level.
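The roll-up itself is a small aggregation once every order carries a facility ID. The sketch below assumes order totals arrive as decimal strings and uses hypothetical field names; tax, currency, and credit handling are out of scope.

```python
from collections import defaultdict
from decimal import Decimal


def build_invoice(parent_account_id: str, orders: list) -> dict:
    """One invoice per parent account, with charges broken out by facility."""
    by_facility = defaultdict(lambda: Decimal("0"))
    for order in orders:
        by_facility[order["facility_id"]] += Decimal(order["total"])
    return {
        "parent_account_id": parent_account_id,
        "facility_subtotals": dict(by_facility),
        "invoice_total": sum(by_facility.values(), Decimal("0")),
    }


orders = [
    {"order_ref": "ORD-1001", "facility_id": "FAC-A", "total": "120.50"},
    {"order_ref": "ORD-1002", "facility_id": "FAC-B", "total": "310.25"},
    {"order_ref": "ORD-1003", "facility_id": "FAC-A", "total": "79.50"},
]
invoice = build_invoice("ACCT-42", orders)
```

Using `Decimal` rather than floats keeps the facility subtotals and the invoice total exactly reconcilable, which is what procurement teams check first.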

The change shows up in how customers perceive your platform. You stop being “the supplier that ships stuff” and start becoming infrastructure. When your subscription engine matches their inventory and operations, your platform becomes an extension of their workflows. That’s the difference between retention and churn, between being viewed as a commodity vendor and a strategic partner.

And the best part? This architecture is repeatable. Once built, it doesn’t just serve one enterprise client. It becomes the backbone for every large account you onboard. You can replicate the same logic across your book of business. What used to be a custom implementation becomes scalable infrastructure. You’re no longer solving the same problem fifteen different ways. You’re delivering one system that works wherever enterprise complexity shows up.

Why Most Implementations Break Under Real-World Conditions

Most subscription implementations fail not because the team lacked effort, but because the architecture was modeled after assumptions that don’t hold in enterprise environments. They start with off-the-shelf tools built for B2C use cases and then try to force those tools into B2B complexity, patching gaps with manual effort, duct-taped logic, and hope.

The first major failure point is the one-size-fits-all approach to subscriptions. A developer turns on a recurring order plugin, links it to a set of SKUs, and assumes the job is done. But the moment a buyer from a multi-site customer tries to adjust a delivery for just one location, everything falls apart. Either they can’t make the change without altering every other subscription under their account, or worse, the system lets them make the change and applies it globally, breaking order flows for all locations downstream.

The second common failure is treating each location as its own customer. In an attempt to localize subscriptions, implementation teams spin up individual accounts for every facility. It seems like a shortcut: one site, one login, and one subscription. But that model fragments the customer experience. Procurement leads lose visibility across their organization. Invoicing becomes a mess of line items and disconnected purchase records. Site managers can’t see what other facilities are doing, and your internal teams can’t answer basic questions like “What’s the total contract value of this customer across all sites?” The structure satisfies the subscription logic, but it breaks everything else.

A third failure is underestimating the importance of metadata. Systems that don’t store facility identifiers, delivery logic, or change history end up relying on internal memory and support ticket history to resolve issues. When an order goes wrong, there’s no structured way to answer “why.” Subscription orders come in like any other, with no tags, no context, and no traceability. Fulfillment teams are left guessing. Support reps escalate issues because there’s no way to validate whether the system executed correctly. Over time, trust erodes, not just within your team but with the client.

Even when architecture is solid, the final point of failure is often visibility. The buyer has no portal to manage location-level subscriptions. They can’t see what’s active, what’s paused, or what’s due next week. They rely on email threads, PDFs, or quarterly check-ins with your rep to know what’s happening. Without transparency, even a technically functional system feels broken. And without the ability to self-manage, buyers default to asking your team for help, turning a scalable feature into a manual service.

None of these failures are technical limitations. They’re design failures, the result of treating subscriptions as a billing problem rather than a workflow problem. The technology is flexible enough to support what’s needed. What breaks is the implementation mindset.

Scaling the Architecture Across Customers Without Rebuilding It Every Time

Once the system works for one enterprise customer, the instinct is to treat it as custom, a special solution for a high-value account. That’s a mistake. The entire point of architecting subscriptions around facility logic is to make complexity repeatable.

The structure doesn’t need to change per customer. The data does.

Each new account plugs into the same model: a parent record, a defined list of facilities, structured subscription profiles tied to each location, and rules for fulfillment and billing. The only variables are how many facilities they have, what they order, and how often. You’re not building new flows; you’re populating a framework.

Implementation gets faster, support tickets drop, and onboarding becomes procedural instead of exploratory. Instead of reinventing the workflow, your team configures known infrastructure, and your clients gain confidence in a system that feels mature, not improvised.

This is how you move from offering subscription features to delivering a subscription platform, one that supports scale without negotiation.

Multi-Location B2B Subscription Framework for BigCommerce

A structured system for managing facility-level procurement flows under a centralized customer account

Use this toolkit if you’re building subscription functionality for industrial, medical, or enterprise buyers with multiple sites, unique delivery cadences, and centralized contracts.

Why B2B Buyers Need a Location-Aware Portal

Most subscription failures aren’t due to billing problems—they break at the buyer interface. In multi-site procurement environments, buyers need control that matches their operational footprint.

Use the table below to clarify what B2B buyers expect from their subscription management UI:

| Buyer Need | Why It Matters | What to Build |
| --- | --- | --- |
| Site-level visibility | Buyers must see what each facility is receiving and when | Dashboard segmented by facility |
| Scoped control | Changes to one facility shouldn’t affect others | Edit tools tied to facility-specific subscriptions |
| Real-time updates | Teams need immediate clarity on what’s active, paused, or changed | Subscription viewer with live status and cadence info |
| Multi-role access | HQ handles finance; sites manage fulfillment | Permissioning by user role or account group |
| Audit-ready logs | Procurement teams require change traceability | Full change history viewer per site |

This is not an optional experience upgrade. For enterprise clients, this structure is required for adoption.

Build Guardrails First, Then Features

Before launching subscription interfaces or automation, you need foundational system safeguards. Use this quick checklist to verify your system is ready to support self-service at scale:

Buyer Safety Checklist (Pre-Portal Readiness)

☐ Can the system prevent global changes from a site-level user?

☐ Is every subscription explicitly tied to a facility ID?

☐ Are changes tracked with actor, timestamp, and JSON diff?

☐ Can paused, modified, or cancelled subscriptions be traced retroactively?

☐ Is there schema validation on new locations and contact edits?

☐ Can the front end display error states or permission blocks clearly?

If any of these are “no,” a portal will amplify risks instead of reducing friction. That’s why audit tooling (like the Change Log) and scoped interfaces (like the Portal Table) come next—once these safeguards are built.
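The first item on that checklist, keeping a site-level user from making changes outside their facility, comes down to a scope check that runs before any edit is applied. The role names (`hq_admin`, `site_manager`) and record shapes here are hypothetical.

```python
def can_edit(user: dict, subscription: dict) -> bool:
    """HQ admins may edit any facility; site managers only their own."""
    if user["role"] == "hq_admin":
        return True
    if user["role"] == "site_manager":
        return subscription["facility_id"] in user["facility_ids"]
    return False


def apply_edit(user: dict, subscription: dict, changes: dict) -> dict:
    """Return an updated copy, or raise if the user is out of scope."""
    if not can_edit(user, subscription):
        raise PermissionError(
            f"{user['user_id']} cannot edit facility {subscription['facility_id']}"
        )
    return {**subscription, **changes}


hq = {"user_id": "u-hq", "role": "hq_admin", "facility_ids": []}
site_b = {"user_id": "u-b", "role": "site_manager", "facility_ids": ["FAC-B"]}

sub_a = {"subscription_id": "SUB-1", "facility_id": "FAC-A", "cadence_days": 14}
sub_b = {"subscription_id": "SUB-2", "facility_id": "FAC-B", "cadence_days": 30}

updated_b = apply_edit(site_b, sub_b, {"cadence_days": 21})
```

Raising a distinct `PermissionError` gives the front end something specific to render as a permission block instead of a generic failure.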

Portal Experience Planning Table

Plan your location-aware user interface for buyers managing complex subscriptions.

| Portal View | Behavior |
| --- | --- |
| Parent Account Dashboard | Lists all active facilities, total active subscriptions |
| Facility Subscription Panel | Shows SKUs, cadence, contact, next run, status (active/paused) |
| Modify Subscription Modal | Lets user edit frequency, quantity, next run date (scoped to facility only) |
| Facility Management Panel | Add/edit locations with validation against schema |
| Subscription History Viewer | Shows full change log for that facility’s subscriptions |

Change Log + Audit Tracker Schema

Track all changes to subscription profiles and facility configurations for compliance and support.

| Field | Description |
| --- | --- |
| log_id | Unique identifier for each event |
| timestamp | UTC datetime of change |
| actor | System / buyer / support |
| type | facility_change / subscription_edit / pause / resume |
| facility_id | Target facility |
| subscription_id | Target subscription (if applicable) |
| changes | JSON diff of before/after |
| notes | Optional: explanation, ticket ID, etc. |
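As a sketch, a log entry with those eight fields can be built with the standard library alone. The diff format (changed keys mapped to `before` and `after` values) is one reasonable reading of "JSON diff", not a fixed standard, and it only compares top-level keys.

```python
import uuid
from datetime import datetime, timezone


def json_diff(before: dict, after: dict) -> dict:
    """Map each changed top-level key to its before and after values."""
    keys = sorted(set(before) | set(after))
    return {
        key: {"before": before.get(key), "after": after.get(key)}
        for key in keys
        if before.get(key) != after.get(key)
    }


def log_entry(actor: str, type_: str, facility_id: str, before: dict,
              after: dict, subscription_id=None, notes: str = "") -> dict:
    """Build one audit record using the fields from the schema table."""
    return {
        "log_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "type": type_,
        "facility_id": facility_id,
        "subscription_id": subscription_id,
        "changes": json_diff(before, after),
        "notes": notes,
    }


entry = log_entry(
    actor="buyer",
    type_="subscription_edit",
    facility_id="FAC-B",
    subscription_id="SUB-17",
    before={"cadence_days": 30, "quantity": 12},
    after={"cadence_days": 21, "quantity": 12},
    notes="Production ramp at Plant B",
)
```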

Implementation Checklist

Use this to validate readiness and performance of your architecture.

Customer Data

☐ Facility schema defined, documented, and enforced

☐ Each parent account contains a structured array of location objects

☐ Location ID used consistently across all linked systems

Subscriptions

☐ Each subscription profile maps to one facility

☐ Facility-level adjustments do not affect sibling facilities

☐ All subscription-generated orders carry correct facility metadata

Fulfillment

☐ Fulfillment system routes orders based on facility-specific logic

☐ Hazmat/doc rules triggered based on destination metadata

☐ Middleware handles fallback / overrides when data is incomplete

Buyer Experience

☐ Buyer portal supports viewing/editing subscriptions by facility

☐ Permissions scoped to prevent global edits from local users

☐ All updates validated against schema rules before commit

Compliance & Traceability

☐ Change log tracks every update with who/what/when

☐ Archived subscriptions retained for auditing

☐ Metadata versioned and accessible for downstream QA

Deployment Readiness Summary

| Domain | Score (0–3) | Notes |
| --- | --- | --- |
| Customer Data | | |
| Subscription Logic | | |
| Fulfillment Routing | | |
| Portal Functionality | | |
| Compliance + Logging | | |
| ERP Sync + Integration | | |

This summary helps operations leaders or solution architects quickly assess the maturity of their systems and identify any gaps.

What to Build Next — If You’re Serious About Owning the B2B Subscription Stack

If you’ve already deployed facility-level subscription logic, you’re ahead of most companies trying to solve B2B ecommerce with off-the-shelf tools. But staying ahead means turning that infrastructure into leverage. Not just a feature set. A platform.

Start with cross-location analytics. Your largest clients don’t just want fulfillment; they want oversight. Build dashboards that show shipments by site, highlight where inventory velocity is rising, and flag locations that are out of sync. If they’re managing 30+ sites, they need exception reporting, not just exports.

Then introduce policy control. Let procurement leads define subscription boundaries: SKUs allowed per site, max monthly volume, and auto-pausing on overconsumption. Make it possible to lock behavior down by facility while keeping purchasing decentralized. That’s what large organizations are actually trying to solve.
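A per-facility policy check along those lines could be sketched as follows. The policy keys (`allowed_skus`, `max_monthly_units`, `auto_pause_on_overconsumption`) and the three outcomes are assumptions made for illustration.

```python
def check_policy(policy: dict, sku: str, qty: int, shipped_this_month: int) -> str:
    """Return 'allow', 'reject', or 'pause' for one proposed order line."""
    if sku not in policy["allowed_skus"]:
        return "reject"
    if shipped_this_month + qty > policy["max_monthly_units"]:
        # Overconsumption either pauses the subscription or rejects the line.
        if policy.get("auto_pause_on_overconsumption", False):
            return "pause"
        return "reject"
    return "allow"


plant_b_policy = {
    "allowed_skus": {"DEGREASER-5L", "GLOVES-NITRILE-M"},
    "max_monthly_units": 100,
    "auto_pause_on_overconsumption": True,
}

within_limit = check_policy(plant_b_policy, "DEGREASER-5L", 12, shipped_this_month=60)
over_limit = check_policy(plant_b_policy, "DEGREASER-5L", 50, shipped_this_month=60)
not_allowed = check_policy(plant_b_policy, "FILTER-HEPA", 1, shipped_this_month=0)
```

Because the policy is stored per facility, procurement leads can tighten one site without changing what any other site is allowed to order.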

Next, expose API-level access. If you want to be infrastructure, act like it. Let clients pipe your location-specific subscription data into their procurement systems, ERPs, or compliance platforms. If you force them to operate through your UI forever, you’ll cap your own relevance.

And finally: formalize it. Give this system a name. Wrap it with onboarding SOPs, internal training, and a real sales motion. Stop treating it like a smart workaround. You’ve built something most B2B ecommerce platforms can’t deliver—even with custom dev teams and enterprise licenses.

If you’re ready to turn this into a competitive moat, contact us; we’ll help you do it right.

Once formalized, this infrastructure becomes the cornerstone of your marketing strategies—positioning your platform as an operational advantage for enterprise buyers, not just another e-commerce tool.